Why AI Models Break When Metadata Is Incomplete

Missing lead metadata breaks AI segmentation and scoring, causing B2B outbound teams to target the wrong accounts and roles.

INDUSTRY INSIGHTS · LEAD QUALITY & DATA ACCURACY · OUTBOUND STRATEGY · B2B DATA STRATEGY

CapLeads Team

12/15/2025 · 3 min read

In B2B lead systems, AI sits upstream of almost everything that matters. It’s used to segment lead lists, score accounts, route leads to sales, and decide who gets contacted first.

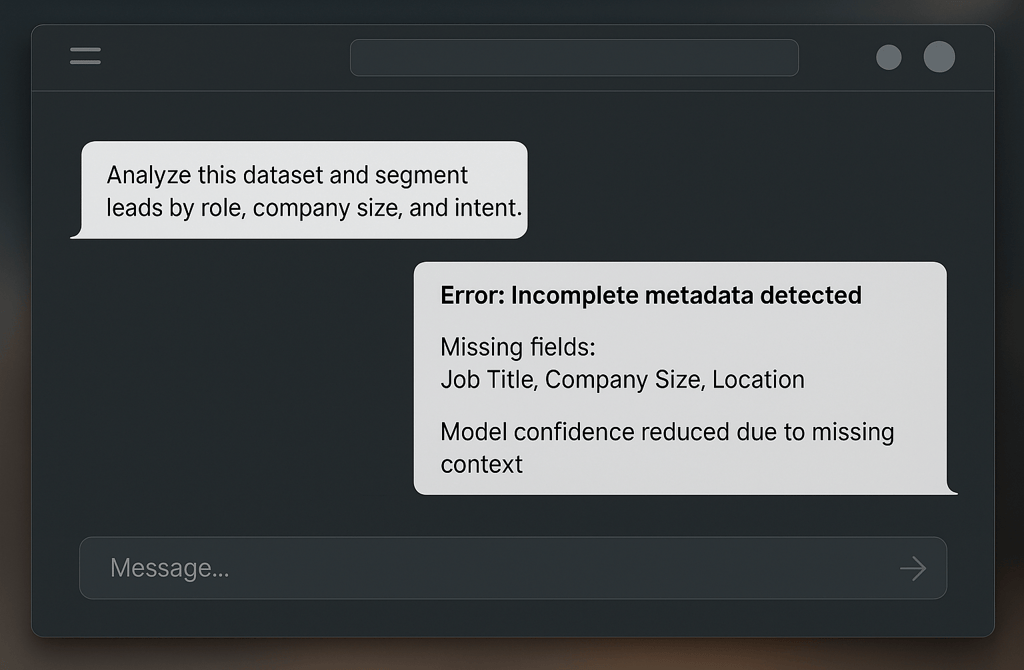

When metadata is incomplete, those systems don’t stop working. They keep running — and that’s the problem.

Instead of throwing errors, AI models quietly misclassify leads, dilute ideal customer profile (ICP) targeting, and push outbound teams toward the wrong accounts. By the time performance drops, the damage is already baked into the data.

1. B2B AI depends on structured lead metadata to function

AI models used in B2B lead operations rely on structured fields to understand who a lead is.

Job title, department, seniority, company size, industry, and geography are not optional. They define how leads are grouped, compared, and prioritized.

When those fields are missing or inconsistent, the model still produces output. But segmentation logic breaks. A manager gets treated like an individual contributor (IC). A startup looks like mid-market. A regional buyer is grouped with global accounts.

The system is operational — but it’s no longer accurate.
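To make this failure mode concrete, here is a minimal Python sketch of rule-based segmentation that never raises on missing data. The function name `naive_segment`, the title keywords, and the headcount cutoffs (50 and 1,000 employees) are illustrative assumptions, not taken from any real CRM or scoring product. A blank title falls through to "IC" and a missing headcount falls back to an assumed mid-market default, which is how a manager ends up treated like an IC and a startup ends up looking like mid-market.

```python
from typing import Optional, Tuple


def naive_segment(title: Optional[str], employees: Optional[int]) -> Tuple[str, str]:
    """Hypothetical rule-based segmentation that never raises on missing data."""
    # Seniority from illustrative title keywords (an assumption, not a standard).
    t = (title or "").lower()
    if any(k in t for k in ("chief", "vp", "head of")):
        seniority = "executive"
    elif "manager" in t or "director" in t:
        seniority = "manager"  # manager-level tier, directors included
    else:
        # A blank or missing title silently lands here.
        seniority = "IC"

    # A missing headcount silently falls back to an assumed mid-market default.
    size = employees if employees is not None else 200
    if size < 50:
        segment = "SMB"
    elif size < 1000:
        segment = "mid-market"
    else:
        segment = "enterprise"
    return seniority, segment
```

`naive_segment("Engineering Manager", 12)` returns `("manager", "SMB")`. The same lead with both fields blank, `naive_segment(None, None)`, returns `("IC", "mid-market")` with no error or warning, so nothing downstream knows the answer was a guess.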

2. Incomplete metadata breaks ICP segmentation

Most B2B AI workflows assume ICP rules are enforced at the data layer.

When company size is missing, AI can’t reliably separate SMB from enterprise. When titles are incomplete, it can’t distinguish decision-makers from influencers. When departments are unclear, routing logic fails.

Instead of precise segments, the model starts grouping leads based on weak proxies. Over time, ICP boundaries blur and outbound lists lose focus.

This is how teams end up “doing outreach” without actually targeting.
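One way to keep ICP rules enforced at the data layer is to make missing values visible instead of defaulting them. The sketch below assumes a hypothetical `Lead` record with `employees` and `department` fields and the same illustrative size bands as before. Leads that can't be checked against the ICP rule go into a separate `unverifiable` bucket rather than being blended into a segment on a weak proxy.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Lead:
    # Hypothetical fields; None means the value was never captured.
    company: str
    employees: Optional[int] = None
    department: Optional[str] = None


def size_band(employees: Optional[int]) -> str:
    """Map headcount to an illustrative band, keeping missing data visible."""
    if employees is None:
        return "unknown"
    if employees < 50:
        return "SMB"
    if employees < 1000:
        return "mid-market"
    return "enterprise"


def split_by_icp(
    leads: List[Lead], target_band: str, target_dept: str
) -> Tuple[List[Lead], List[Lead], List[Lead]]:
    """Partition leads into ICP matches, non-matches, and unverifiable records."""
    matches, misses, unverifiable = [], [], []
    for lead in leads:
        band = size_band(lead.employees)
        if band == "unknown" or lead.department is None:
            # Not enough metadata to check this lead against the ICP rule.
            unverifiable.append(lead)
        elif band == target_band and lead.department.lower() == target_dept.lower():
            matches.append(lead)
        else:
            misses.append(lead)
    return matches, misses, unverifiable
```

With an enterprise Finance ICP, a 20-person Finance team lands in `misses`, while a record with no headcount lands in `unverifiable` instead of silently counting as either a match or a miss.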

3. Lead scoring becomes confident and wrong

AI lead scoring doesn’t pause when context is missing. It recalculates.

With incomplete metadata, models lean on historical averages and partial signals. Scores stabilize because patterns repeat — not because they’re correct.

Sales teams trust the numbers because they look consistent. But replies drop, conversations stall, and deals don’t materialize. The issue isn’t messaging or cadence. It’s that the model never had enough context to score leads properly.

Confidence increases. Accuracy declines.
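The "consistent but wrong" effect is easy to reproduce with mean imputation, a common way of filling gaps with historical averages. This is a minimal sketch assuming a two-feature linear score; the feature names (`seniority`, `size_fit`), the weights, and the `HISTORY` values are all hypothetical.

```python
from statistics import mean
from typing import Dict, List, Optional

# Hypothetical feature weights for an illustrative linear lead score.
WEIGHTS = {"seniority": 0.6, "size_fit": 0.4}

# Hypothetical past leads with fully populated features (0.0 = poor, 1.0 = ideal).
HISTORY: List[Dict[str, float]] = [
    {"seniority": 1.0, "size_fit": 1.0},
    {"seniority": 0.0, "size_fit": 0.0},
    {"seniority": 0.5, "size_fit": 0.5},
]


def impute_with_history(
    lead: Dict[str, Optional[float]], history: List[Dict[str, float]]
) -> Dict[str, float]:
    """Fill each missing feature with its historical average (mean imputation)."""
    filled = {}
    for feature in WEIGHTS:
        value = lead.get(feature)
        filled[feature] = value if value is not None else mean(h[feature] for h in history)
    return filled


def score(lead: Dict[str, Optional[float]], history: List[Dict[str, float]]) -> float:
    """Weighted sum of (possibly imputed) features, rounded for display."""
    features = impute_with_history(lead, history)
    return round(sum(WEIGHTS[f] * features[f] for f in WEIGHTS), 3)
```

With these averages, a senior buyer at a well-fit company scores 1.0 and a poor fit scores 0.0. If both records arrive with those fields blank, both score exactly 0.5. The number is stable and repeatable, and it says nothing about either lead.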

4. Errors compound as leads move downstream

Metadata failures don’t stay isolated.

Once a lead is misclassified, every downstream system inherits the mistake. Segmentation feeds scoring. Scoring feeds routing. Routing feeds outbound sequences.

By the time an SDR notices something is wrong, the problem looks like poor performance instead of bad data. Teams tweak copy, adjust sequences, or blame timing — while the real issue sits upstream in missing metadata.

AI didn’t cause the failure. It amplified it.
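Here is a minimal sketch of that chain, assuming three hypothetical stages (`segment`, `score`, `route`) that each trust the previous stage's output. The seniority-to-points table and the routing threshold are illustrative. The only mistake happens in `segment`, yet it decides the final route. Recording which fields were defaulted is one way to keep the upstream cause visible to whoever inspects the result.

```python
from typing import Any, Dict

# Illustrative lookup table and threshold; real scoring models are rarely this simple.
SENIORITY_POINTS = {"executive": 90, "manager": 60, "IC": 20}
ROUTE_THRESHOLD = 50


def segment(lead: Dict[str, Any]) -> Dict[str, Any]:
    out = dict(lead)
    out["defaulted"] = []  # which fields were guessed rather than captured
    if not out.get("seniority"):
        out["seniority"] = "IC"  # silent fallback: the upstream mistake
        out["defaulted"].append("seniority")
    return out


def score(lead: Dict[str, Any]) -> Dict[str, Any]:
    # Scoring trusts whatever seniority segmentation produced.
    return {**lead, "score": SENIORITY_POINTS[lead["seniority"]]}


def route(lead: Dict[str, Any]) -> Dict[str, Any]:
    # Routing trusts the score and never looks at the raw fields.
    dest = "AE outreach" if lead["score"] >= ROUTE_THRESHOLD else "nurture sequence"
    return {**lead, "route": dest}


def run_pipeline(lead: Dict[str, Any]) -> Dict[str, Any]:
    return route(score(segment(lead)))
```

The same contact routes to `"AE outreach"` when seniority is captured and to `"nurture sequence"` when it isn't. Only the `defaulted` list points back to the missing field.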

5. AI cannot recover context that B2B data never captured

No model can reliably infer what was never captured.

If a lead record lacks seniority, department, or accurate company size, AI has nothing to reason with. Prompting harder, retraining models, or adding logic layers won’t fix missing structure.

In B2B lead systems, metadata completeness sets the ceiling for AI effectiveness. When inputs are incomplete, automation delivers scale, not relevance.
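Because completeness sets the ceiling, a practical step is to measure it before any scoring runs. This sketch assumes the six fields named earlier in this post (job title, department, seniority, company size, industry, geography), stored under hypothetical keys such as `job_title` and `company_size`, and a hypothetical `gate` function that holds incomplete records for enrichment instead of passing them to the model. The default threshold of 1.0 (every field present) is an assumption; a team might choose a lower bar.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical keys for the core lead metadata fields discussed in this post.
REQUIRED_FIELDS = (
    "job_title", "department", "seniority",
    "company_size", "industry", "geography",
)


def completeness(record: Dict[str, Any]) -> float:
    """Share of required fields that are present and non-empty."""
    filled = sum(1 for f in REQUIRED_FIELDS if record.get(f) not in (None, ""))
    return filled / len(REQUIRED_FIELDS)


def gate(
    records: List[Dict[str, Any]], threshold: float = 1.0
) -> Tuple[List[Dict[str, Any]], List[Dict[str, Any]]]:
    """Split records into (ready for AI scoring, needs enrichment)."""
    ready, hold = [], []
    for r in records:
        (ready if completeness(r) >= threshold else hold).append(r)
    return ready, hold
```

A record with only a title and a headcount scores 2/6 on completeness and is held back, so the model never gets the chance to score it confidently from averages.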

Final thought

AI doesn’t break loudly in B2B lead systems. It breaks quietly when metadata is missing.

Complete lead metadata keeps AI segmentation and scoring aligned with reality.

Incomplete context is what turns outbound automation into confident misdirection.

