The Hidden AI Errors Caused by Dirty Data

Dirty data introduces silent errors into AI systems, causing misclassification, false confidence, and unreliable outputs long before teams notice the damage.


CapLeads Team

12/15/2025 · 2 min read

AI systems rarely fail loudly.

They don’t crash, throw obvious errors, or stop working when something is wrong. Instead, they keep running — quietly producing outputs that look correct while accuracy degrades underneath.

That’s what makes dirty data dangerous in AI-assisted systems. The damage is subtle, cumulative, and often invisible until results collapse.

1. Dirty Data Introduces Silent Misclassification

AI relies on patterns across fields, not individual records.

When job titles, departments, company sizes, or locations are inconsistent, the system still classifies them — just incorrectly. A role gets placed in the wrong bucket. A company gets grouped into the wrong segment. A contact inherits the wrong intent profile.

Nothing looks broken. But relevance is already compromised.
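
To make this concrete, here is a minimal sketch of a keyword-based title classifier. The rules, bucket names, and abbreviation map are hypothetical and chosen only for illustration; a production system may use a trained model instead. The point is that an inconsistent spelling does not raise an error. It just falls into the default bucket.

```python
import re

# Hypothetical keyword rules, for illustration only.
SENIORITY_RULES = [
    ("chief", "executive"),
    ("vice president", "executive"),
    ("vp", "executive"),
    ("director", "management"),
    ("manager", "management"),
]

# Hypothetical abbreviation map; a real one would be much larger.
ABBREVIATIONS = {"mgr": "manager", "dir": "director"}


def classify_title(title: str) -> str:
    """Return the first matching bucket; unmatched titles fall to a default."""
    lowered = title.lower()
    for keyword, bucket in SENIORITY_RULES:
        if keyword in lowered:
            return bucket
    return "individual_contributor"  # silent fallback, no error raised


def normalize_title(title: str) -> str:
    """Drop periods, keep alphabetic words, and expand known abbreviations."""
    words = re.findall(r"[a-z]+", title.lower().replace(".", ""))
    return " ".join(ABBREVIATIONS.get(word, word) for word in words)


for raw in ["VP Sales", "V.P. Sales", "Mgr, Marketing"]:
    before = classify_title(raw)
    after = classify_title(normalize_title(raw))
    print(f"{raw!r}: raw -> {before}, normalized -> {after}")
```

In this sketch, "V.P. Sales" and "Mgr, Marketing" are both bucketed as individual contributors until the titles are normalized, and nothing in the output flags the difference.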

2. AI Confidence Increases Even When Accuracy Drops

One of the most overlooked AI errors is false confidence.

As dirty data flows through the system, models still generate scores, rankings, and predictions. Over time, these outputs can become more confident, because the system sees the same values repeated and treats repetition as evidence, even when the repeated values are wrong.

Teams trust the output because it’s consistent, not realizing the consistency is built on flawed inputs.
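
A toy example shows how repetition can inflate confidence. The scorer below is an assumption made for illustration (a majority vote with Laplace smoothing over two classes), not a description of how any particular model works. It assumes a company that is really "enterprise" but whose stale "smb" record gets duplicated on every sync.

```python
from collections import Counter


def predict_with_confidence(labels):
    """Return the majority label and a Laplace-smoothed confidence (two classes)."""
    counts = Counter(labels)
    label, hits = counts.most_common(1)[0]
    confidence = (hits + 1) / (len(labels) + 2)
    return label, confidence


# The label never changes, but the confidence climbs as the
# same wrong record is seen more often.
for copies in (1, 5, 20):
    label, confidence = predict_with_confidence(["smb"] * copies)
    print(copies, label, round(confidence, 3))
```

The predicted label stays wrong throughout. Only the confidence attached to it grows.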

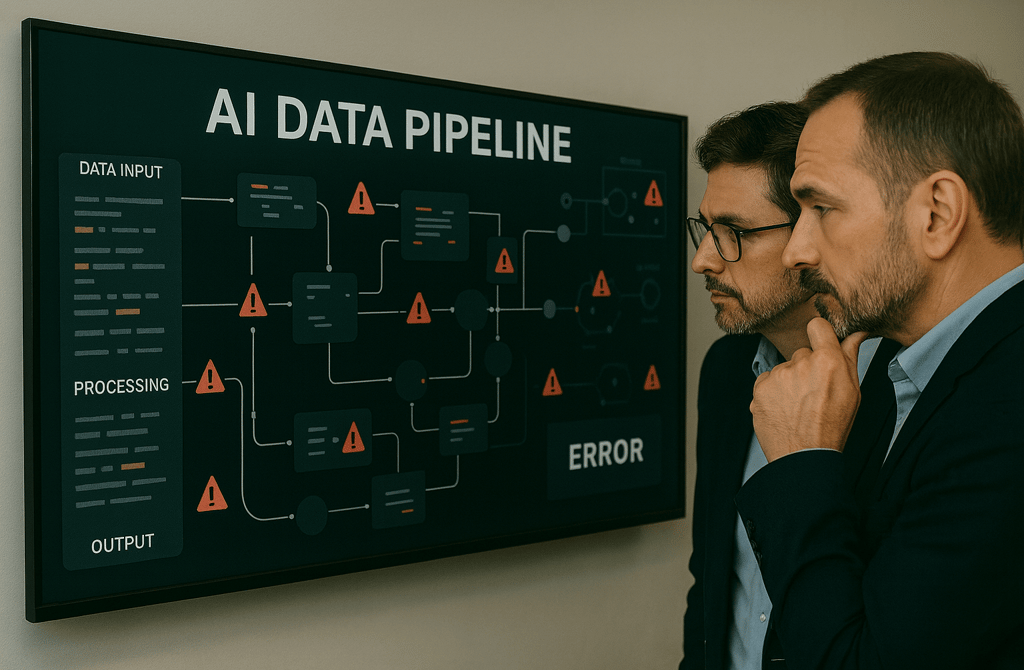

3. Errors Compound Across the Entire Pipeline

Dirty data doesn’t stay isolated.

Once an incorrect classification enters the pipeline, it affects:

scoring models

prioritization logic

filtering rules

downstream automation

A single bad assumption can ripple through enrichment, validation, and outreach layers, multiplying the impact of one small data issue.
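
The ripple effect can be sketched with a four-stage pipeline. The base scores, thresholds, and sequence names below are hypothetical. The sketch runs the same lead twice, once with the correct seniority label and once with a wrong one, and lists which downstream outputs change.

```python
# Hypothetical base scores per seniority bucket.
BASE_SCORE = {"executive": 90, "management": 60, "individual_contributor": 20}


def run_pipeline(seniority: str) -> dict:
    """Score, prioritize, filter, and route a lead from one upstream label."""
    score = BASE_SCORE[seniority]                      # scoring model
    if score >= 80:                                    # prioritization logic
        priority = "high"
    elif score >= 50:
        priority = "medium"
    else:
        priority = "low"
    passes_filter = priority != "low"                  # filtering rule
    if not passes_filter:                              # downstream automation
        sequence = None
    elif priority == "high":
        sequence = "executive_outreach"
    else:
        sequence = "standard_outreach"
    return {
        "score": score,
        "priority": priority,
        "passes_filter": passes_filter,
        "sequence": sequence,
    }


correct = run_pipeline("executive")
mislabeled = run_pipeline("individual_contributor")
changed = [key for key in correct if correct[key] != mislabeled[key]]
print(changed)
```

One wrong input field changes every output in this sketch, and each stage looks internally consistent when inspected on its own.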

4. AI Can’t Distinguish Noise From Signal Without Guardrails

AI is excellent at detecting patterns — but only when boundaries are clear.

When inputs are noisy, the system struggles to separate meaningful signals from random variation. It treats outdated roles, mismatched departments, and conflicting sources as legitimate data points.

Without clean inputs, AI learns the wrong lessons.
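
One possible guardrail is a record-level check that runs before anything reaches the model. The sketch below assumes a hypothetical record layout (`title`, `department`, `last_verified`, `company_size_by_source`), an assumed 180-day freshness window, and a small illustrative map from title keywords to departments. It flags the three problems named above: outdated roles, mismatched departments, and conflicting sources.

```python
from datetime import date

MAX_AGE_DAYS = 180  # assumed freshness window
# Hypothetical hints linking title keywords to the department they imply.
TITLE_DEPARTMENT_HINTS = {
    "sales": "Sales",
    "engineer": "Engineering",
    "marketing": "Marketing",
}


def guardrail_issues(record: dict, today: date) -> list:
    """Return the reasons a record should be held back from the model."""
    issues = []
    if (today - record["last_verified"]).days > MAX_AGE_DAYS:
        issues.append("outdated_role")
    title = record["title"].lower()
    for keyword, department in TITLE_DEPARTMENT_HINTS.items():
        if keyword in title and record["department"] != department:
            issues.append("mismatched_department")
            break
    if len(set(record["company_size_by_source"].values())) > 1:
        issues.append("conflicting_sources")
    return issues


record = {
    "title": "Sales Director",
    "department": "Engineering",
    "last_verified": date(2024, 1, 10),
    "company_size_by_source": {"crm": "51-200", "vendor": "1000+"},
}
print(guardrail_issues(record, today=date(2025, 12, 15)))
```

Records that return a non-empty list can be routed to cleanup instead of being fed to the model as if they were legitimate data points.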

5. Dirty Data Breaks Human Review Loops

Many teams rely on human oversight to catch AI mistakes.

But when the underlying data is inconsistent, reviewers spend their time interpreting records instead of validating decisions. Human review becomes slower, more expensive, and less effective — which defeats the purpose of AI assistance.

Clean data is what makes human-in-the-loop systems viable at scale.

6. Most AI Errors Originate Before Processing Begins

When AI performance degrades, teams often blame:

the model

the algorithm

the scoring logic

In reality, most failures originate upstream, before AI processing even starts. By the time a model touches the information, dirty data has already introduced the hidden errors.

Fixing the model doesn’t fix the input problem.
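
A quick way to check the input side first is a dataset-level audit. The sketch below is one possible shape for it, with hypothetical field names and `email` assumed as the record key. It reports the share of missing values per required field and any keys that appear more than once after trimming and lowercasing.

```python
from collections import Counter


def audit_inputs(records, required_fields, key_field="email"):
    """Summarize missing values per field and duplicate keys across records."""
    if not records:
        raise ValueError("no records to audit")
    total = len(records)
    missing_rate = {
        field: sum(1 for r in records if not r.get(field)) / total
        for field in required_fields
    }
    key_counts = Counter(
        r[key_field].strip().lower() for r in records if r.get(key_field)
    )
    duplicate_keys = {key: n for key, n in key_counts.items() if n > 1}
    return {"missing_rate": missing_rate, "duplicate_keys": duplicate_keys}


records = [
    {"email": "ana@example.com", "title": "VP Sales", "department": "Sales"},
    {"email": "ANA@example.com ", "title": "", "department": "Sales"},
    {"email": "li@example.com", "title": "Engineer", "department": None},
    {"email": "sam@example.com", "title": "Manager", "department": "Ops"},
]
report = audit_inputs(records, required_fields=["email", "title", "department"])
print(report)
```

If a report like this shows high missing rates or many duplicate keys, the input is the first thing to fix, before any retraining or rescoring.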

Final Thought

AI doesn’t expose dirty data immediately.

It hides the damage behind polished outputs and confident scores.

The most dangerous AI errors aren’t obvious failures — they’re quiet inaccuracies that scale unnoticed.

Clean data makes outbound predictable because AI amplifies the right signals.

Dirty data causes AI systems to scale errors faster than any human process ever could.
