Why Framework Experiments Fail When Lists Aren’t Fresh

Cold email frameworks don’t fail because of structure — they fail when stale lead lists distort testing results. Here’s how data recency quietly breaks outbound experiments.

INDUSTRY INSIGHTSLEAD QUALITY & DATA ACCURACYOUTBOUND STRATEGYB2B DATA STRATEGY

CapLeads Team

2/13/20263 min read

A framework rarely “stops working” overnight.

What usually stops working is the list underneath it.

Teams change subject lines. They adjust CTAs. They restructure their opening lines. They rewrite entire sequences. Then they compare reply rates and conclude that version A beats version B.

But if the lead list feeding those experiments has already aged, the test itself is contaminated before it even starts.

You’re not testing framework strength.

You’re testing how decay interacts with messaging.

Framework Testing Assumes Stable Inputs

Every outbound experiment relies on one quiet assumption:

The audience remains consistent while the message changes.

That assumption collapses the moment your list isn’t fresh.

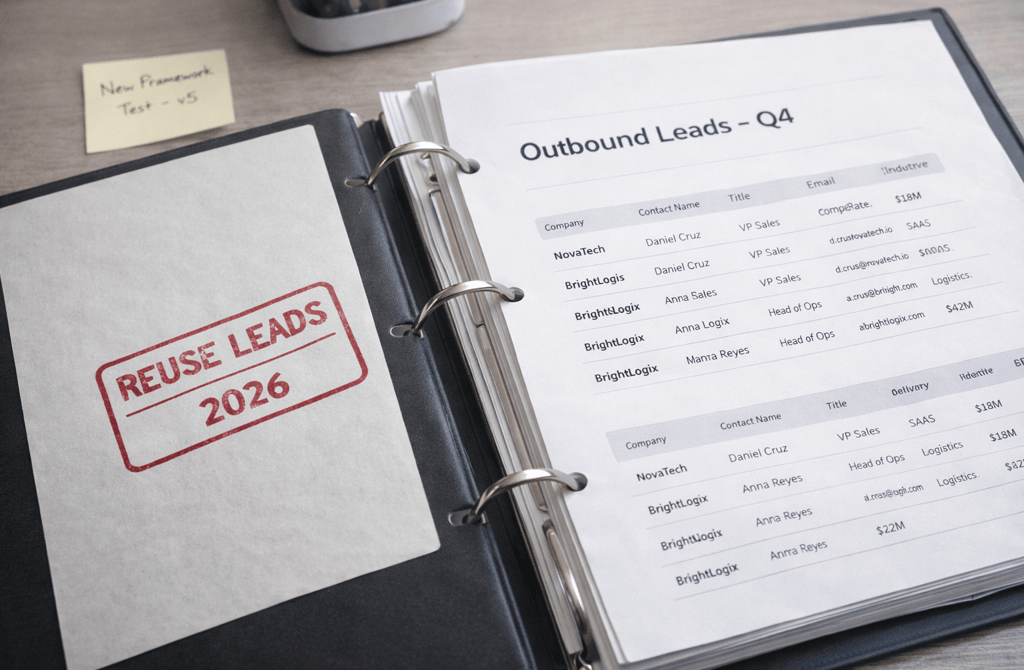

When contacts change roles, companies shift priorities, or departments restructure, the ICP alignment shifts with them. If you launch “Framework v5” on a list that hasn’t been validated recently, you are no longer comparing structure versus structure. You are comparing structure against decay.

And decay always wins the confusion game.

Data Aging Distorts Experimental Results

Here’s what happens when you experiment on stale lists:

Reply rates fall, and you assume the hook is weak.

Opens flatten, and you blame subject lines.

Click-through drops, and you assume offer mismatch.

But aged data introduces silent variables:

Contacts who no longer hold decision power.

Departments that have been reorganized.

Emails technically deliverable but no longer relevant.

The result is statistical noise disguised as performance feedback.

You think you’re running controlled tests.

In reality, you’re testing against a moving target.

Recency Changes Engagement Probability

Fresh data behaves differently.

Recently validated contacts tend to:

Reflect current org structure.

Align with up-to-date role responsibilities.

Sit within accurate company size and revenue brackets.

Match the lifecycle stage you intended to target.

That stability produces clearer signal patterns. When a framework works on fresh lists, reply behavior is more predictable. When it fails, the failure is structural — not environmental.

That distinction matters.

Without recency discipline, every test result becomes ambiguous. Was it the copy? The CTA? Or the fact that 20% of the list has drifted out of ICP fit?

Framework Illusions Are Expensive

The biggest cost isn’t lower reply rate.

It’s misdiagnosis.

When teams assume the framework is broken, they:

Rewrite sequences prematurely.

Add unnecessary follow-ups.

Split test endlessly.

Each iteration burns sending capacity and attention.

But the real issue sits upstream: list freshness.

If the input quality erodes, output stability erodes with it. And no amount of structural optimization fixes that.

Why Fresh Lists Create Clearer Performance Patterns

Freshness does three things for experimentation:

It reduces ICP drift.

It minimizes role misalignment.

It stabilizes reply probability curves.

When those variables are controlled, framework adjustments become measurable. You can isolate subject lines. You can isolate CTA shifts. You can isolate value proposition framing.

Your experiments start producing signal instead of noise.

And signal is what allows outbound to scale intelligently.

The Real Takeaway

Framework testing only works when the audience layer is stable.

If your list hasn’t been validated recently, your experiment is already biased. You’re optimizing structure on top of decaying foundations.

Strong messaging depends on accurate targeting.

When targeting drifts, structure becomes unpredictable.

Related Post:

How Risky Email Patterns Reveal Broken Data Providers

How Industry Structure Influences Email Risk Levels

Why Certain Sectors Experience Faster Data Decay Cycles

The Hidden Validation Gaps Inside Niche Industry Lists

How Industry Turnover Impacts Lead Freshness

Why Validation Complexity Increases in Specialized Markets

How Revenue Misclassification Creates Fake ICP Matches

Why Geo Inaccuracies Lower Your Reply Rate

The Size Signals That Predict Whether an Account Is Worth Targeting

How Bad Location Data Breaks Personalization Attempts

Why Company Growth Rates Matter for Accurate Targeting

Why Testing B2B Lead Data Matters Before You Buy

How Department Shifts Impact Your Cold Email Results

Why Title Ambiguity Creates Hidden Pipeline Waste

The Hidden Problems Caused by Outdated Job Roles

How Poor Infrastructure Amplifies Minor Data Issues

Why Weak Architecture Triggers Spam Filters Faster

The Domain Reputation Mechanics Founders Should Understand

How Spam Algorithms Interpret Sudden Send Volume Changes

Why Inconsistent Targeting Raises Spam Filter Suspicion

The Inbox Sorting Logic ESPs Never Explain Publicly

How Risky Sending Patterns Trigger Domain-Level Penalties

Why Domain Reputation Is Built on Consistency, Not Volume

The Hidden Domain Factors That Influence Inbox Placement

Why Copy Tweaks Don’t Fix Underlying Data Problems

The Hidden Data Requirements Behind High-Performing Frameworks

Connect

Get verified leads that drive real results for your business today.

www.capleads.org

© 2025. All rights reserved.

Serving clients worldwide.

CapLeads provides verified B2B datasets with accurate contacts and direct phone numbers. Our data helps startups and sales teams reach C-level executives in FinTech, SaaS, Consulting, and other industries.