The Hidden Data Requirements Behind High-Performing Frameworks

High-performing cold email frameworks depend on clean data, precise targeting, and accurate validation. Here’s the data layer most teams ignore when optimizing copy and sequences.

Industry Insights | Lead Quality & Data Accuracy | Outbound Strategy | B2B Data Strategy

CapLeads Team

2/13/2026 · 4 min read

There’s a version of this story that gets told at every outbound meetup.

“We changed the framework. Replies doubled.”

What rarely gets mentioned is what happened before that framework started working.

Because high-performing cold email frameworks don’t operate in isolation. They sit on top of invisible conditions. And when those conditions aren’t met, the same “proven” sequence that wins in one account completely flattens in another.

The difference isn’t creativity. It’s data readiness.

Frameworks Don’t Create Alignment — They Amplify It

A strong outbound framework does three things well:

Establishes relevance

Reduces friction

Creates forward motion

But none of those matter if the underlying targeting is off by even a small margin.

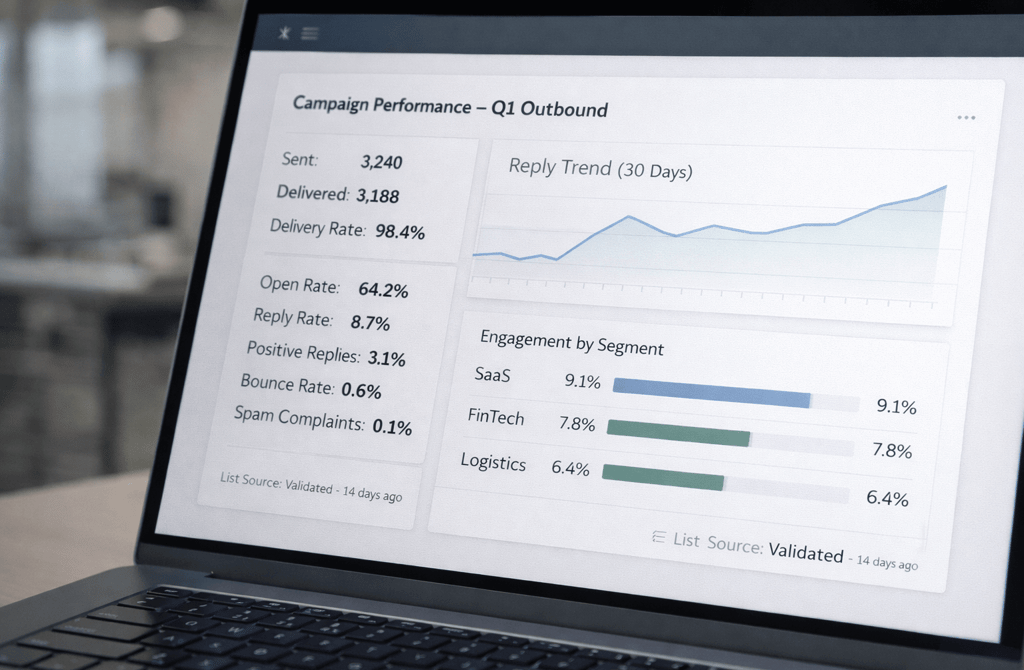

When the ICP definition is tight, role accuracy is precise, and contact recency is controlled, frameworks feel sharp. They generate predictable reply behavior. Engagement looks stable.

When those layers drift, the same framework suddenly feels “burned.”

Not because prospects got smarter.

Because the alignment signal weakened.

Frameworks don’t manufacture fit. They amplify whatever fit already exists in the data.

The Stability Threshold Most Teams Ignore

There’s a hidden threshold where frameworks start working consistently.

It usually includes:

Validation cycles within a tight recency window

Role and department precision with minimal ambiguity

Company size segmentation that reflects actual buying power

Bounce rates held consistently under 1%

Once those thresholds are met, performance smooths out. Metrics stop swinging wildly between batches. Reply curves become more consistent.

Below that threshold, framework performance becomes volatile.

You can test ten variations and see ten different outcomes, not because the copy is dramatically different, but because the data underneath is inconsistent.

Framework iteration without data stability is noise.
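Part of that threshold can be expressed as a simple gate that a batch must pass before its results are used to judge a framework. The sketch below is a minimal, hypothetical illustration, assuming each contact record carries the date it last passed validation and a hard-bounce flag from the most recent send. The 30-day recency window is an assumed default (the thresholds above don't name a number); the 1% ceiling comes from the list. Role precision and size segmentation are left out because they depend on how each team defines its ICP.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Contact:
    email: str
    last_validated: date  # date the address last passed validation
    bounced: bool         # hard bounce on the most recent send


def batch_is_stable(contacts, today, max_age_days=30, max_bounce_rate=0.01):
    """Check one batch against the recency and bounce thresholds.

    max_age_days=30 is a hypothetical recency window; max_bounce_rate=0.01
    encodes the "under 1%" bounce threshold. Returns (ok, reasons).
    """
    if not contacts:
        return False, ["empty batch"]
    reasons = []
    cutoff = today - timedelta(days=max_age_days)
    # Count contacts whose last validation falls outside the recency window.
    stale = sum(1 for c in contacts if c.last_validated < cutoff)
    if stale:
        reasons.append(f"{stale} contacts last validated before {cutoff}")
    # Share of contacts that hard-bounced on the most recent send.
    bounce_rate = sum(c.bounced for c in contacts) / len(contacts)
    if bounce_rate >= max_bounce_rate:
        reasons.append(f"bounce rate {bounce_rate:.1%} is not under {max_bounce_rate:.0%}")
    return not reasons, reasons


today = date(2026, 2, 13)
batch = [Contact(f"user{i}@example.com", today - timedelta(days=5), False) for i in range(200)]
batch[0].bounced = True  # 1 hard bounce in 200 sends = 0.5%
print(batch_is_stable(batch, today))  # (True, [])
```

A batch that fails this gate can still be sent. The point is that its reply rate shouldn't be counted as evidence for or against a framework.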

Why Frameworks “Suddenly Stop Working”

This is one of the most common complaints in outbound:

“This framework used to work. Now it doesn’t.”

What changed?

Often, nothing about the message.

What changed is:

Job role movement inside the target segment

ICP creep from broadening filters

Aged contact data slipping into exports

Multi-source blending introducing small conflicts

Those shifts are subtle. But they compound.

A framework that once matched the buyer’s pain precisely now hits adjacent roles. Or reaches contacts who no longer own the decision.

The drop in reply rate gets attributed to fatigue.

But fatigue is rarely the real cause.

Misalignment is.

High-Performing Frameworks Depend on Controlled Variables

Framework testing assumes that everything else is stable.

But if:

List segments aren’t normalized

Recency windows vary between sends

Different enrichment depths are mixed together

Role hierarchies are inconsistently mapped

Then you aren’t testing framework quality.

You’re testing unstable data conditions.

This is why some teams think they’re optimizing copy when they’re actually chasing data inconsistencies.

The more unstable the input, the more misleading the learning.
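One way to keep a framework test honest is to compare the data profiles of the batches before comparing their reply rates. This is a minimal sketch under stated assumptions: the `BatchProfile` record, the segment label, and the tolerances (7 days of median recency, one enrichment field of median depth) are all hypothetical, and role-hierarchy mapping isn't covered.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class BatchProfile:
    segment: str                # filter definition the batch was pulled with
    validation_ages: list[int]  # days since each contact was last validated
    enriched_fields: list[int]  # populated enrichment fields per contact


def comparability_problems(a, b, max_age_gap=7, max_depth_gap=1.0):
    """List the reasons two test batches are not comparable (empty list if they are).

    The tolerances are hypothetical defaults, not established benchmarks.
    """
    problems = []
    if a.segment != b.segment:
        problems.append("different segment definitions")
    # Compare median recency so one stale batch can't skew the test.
    age_gap = abs(median(a.validation_ages) - median(b.validation_ages))
    if age_gap > max_age_gap:
        problems.append(f"median recency differs by {age_gap} days")
    # Compare median enrichment depth so richer data isn't mistaken for better copy.
    depth_gap = abs(median(a.enriched_fields) - median(b.enriched_fields))
    if depth_gap > max_depth_gap:
        problems.append(f"median enrichment depth differs by {depth_gap} fields")
    return problems


variant_a = BatchProfile("saas-ops-50-200", [3, 5, 8, 10], [6, 6, 7, 7])
variant_b = BatchProfile("saas-ops-50-200", [20, 35, 40, 60], [3, 4, 4, 5])
for problem in comparability_problems(variant_a, variant_b):
    print(problem)
```

If this returns any problems, a difference in reply rate between the two variants says more about the data than about the copy.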

Data Completeness Shapes Message Depth

High-performing frameworks often rely on:

Clear seniority mapping

Department-specific language

Industry nuance

Accurate company growth signals

Without complete metadata, frameworks become generic by necessity.

You can’t write role-aware messaging if title precision is weak.

You can’t tailor outreach by lifecycle stage if revenue data is misclassified.

You can’t reference operational pain if industry tagging is too broad.

When data completeness increases, frameworks naturally become sharper.

When it decreases, frameworks flatten.

Copy didn’t lose power.

Context disappeared.
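Completeness can be measured before any copy is written. The sketch below computes, for each metadata field, the share of records that actually have a value. The field names (`seniority`, `department`, `industry`, `revenue_band`) and the 90% floor are hypothetical placeholders for whatever a team's records and standards look like.

```python
def field_fill_rates(records, fields):
    """Return the share of records with a non-empty value for each field."""
    total = len(records)
    rates = {}
    for field in fields:
        # Missing keys, None, and empty strings all count as unfilled.
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        rates[field] = filled / total if total else 0.0
    return rates


# Hypothetical field names and a hypothetical 90% completeness floor.
FIELDS = ["seniority", "department", "industry", "revenue_band"]
MIN_FILL_RATE = 0.9

records = [
    {"seniority": "VP", "department": "Finance", "industry": "SaaS", "revenue_band": "10-50M"},
    {"seniority": "Director", "department": "", "industry": "SaaS", "revenue_band": None},
    {"seniority": "Manager", "department": "Ops", "industry": "FinTech"},
    {"seniority": "", "department": "Ops", "industry": "SaaS", "revenue_band": "50-100M"},
]

rates = field_fill_rates(records, FIELDS)
weak = [f for f in FIELDS if rates[f] < MIN_FILL_RATE]
print(rates)
print("too sparse for field-specific messaging:", weak)
```

Any field below the floor is a signal to keep messaging that depends on it generic, or to enrich the list before testing copy built on it.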

Deliverability Is the Silent Requirement

There’s another hidden dependency: inbox stability.

High-performing frameworks assume:

Healthy domain reputation

Stable bounce rates

Positive engagement clusters

Consistent sending cadence

If data quality declines, bounce rates climb and engagement signals weaken. That hurts inbox placement. Lower placement means fewer prospects see the message, and fewer readers means fewer replies.

And once again, the framework gets blamed.

But no sequence performs well when inbox placement erodes quietly behind the scenes.

Data hygiene protects more than targeting accuracy. It also protects the conditions a framework needs in order to be evaluated fairly.

The Illusion of Copy-Led Success

When a framework produces strong results, it’s tempting to attribute success entirely to structure.

But structure is only the visible layer.

Underneath it are:

Validation discipline

Segmentation precision

ICP clarity

Role accuracy

Recency control

Infrastructure stability

Remove those supports, and the framework collapses.

Maintain them, and even modest copy can perform reliably.

Frameworks feel powerful because they’re visible.

Data foundations feel boring because they’re quiet.

But performance lives in the quiet layer.

What This Means

If you’re testing framework after framework without consistent improvement, don’t assume creativity is the bottleneck.

Audit the hidden requirements:

Is the segment stable week to week?

Are roles clean and mapped correctly?

Is recency tightly controlled?

Are bounce levels stable?

Is enrichment complete enough to support personalization depth?

High-performing frameworks aren’t magic formulas. They’re structures that only work when the data beneath them is stable.

Strong frameworks accelerate results when the inputs are disciplined.

Weak data turns even the best framework into a guessing game.


Connect

Get verified leads that drive real results for your business today.

www.capleads.org

© 2025. All rights reserved.

Serving clients worldwide.

CapLeads provides verified B2B datasets with accurate contacts and direct phone numbers. Our data helps startups and sales teams reach C-level executives in FinTech, SaaS, Consulting, and other industries.