The Upstream Errors That Create Downstream Pipeline Damage

Small upstream data errors quietly erode pipeline health, distorting targeting, scoring, and deal progression long before revenue drops.

INDUSTRY INSIGHTS | LEAD QUALITY & DATA ACCURACY | OUTBOUND STRATEGY | B2B DATA STRATEGY

CapLeads Team

1/18/2026 | 3 min read

Pipeline damage rarely starts where teams look for it.

Deals don’t stall because reps suddenly forget how to sell.

Pipelines don’t thin out because follow-ups weren’t sent.

Forecasts don’t miss because one stage was mismanaged.

They break because upstream data errors quietly shape every downstream decision.

By the time pipeline damage is visible, the cause is already buried.

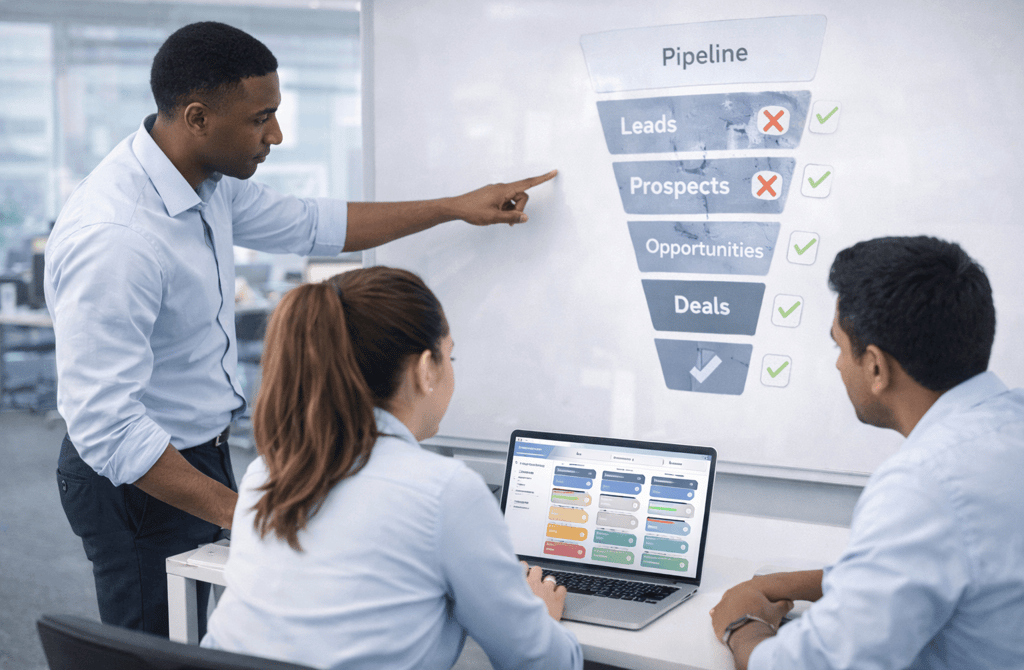

Pipeline Is an Output, Not a Control Lever

Most teams treat pipeline like something you fix directly:

improve handoffs

adjust stages

tighten qualification

add volume at the top

But pipeline isn’t a system you control. It’s a result of upstream inputs behaving correctly—or not.

If upstream data is flawed, pipeline accuracy becomes an illusion. You can clean stages all day and still be working with corrupted flow.

How Damage Actually Travels Downstream

Upstream errors don’t break things immediately. They reshape flow.

Here’s what usually happens:

Accounts are enrolled that shouldn’t be there

Early engagement looks “fine” but doesn’t reflect buying intent

Opportunities appear but don’t progress

Late-stage pipeline thins unexpectedly

Nothing looks broken at the start.

The damage only becomes obvious after resources are already spent.

That’s why teams experience pipeline issues as surprises instead of signals.

Why Downstream Fixes Never Hold

When damage shows up late, teams respond late:

revise qualification rules

redefine stages

pressure SDRs

tweak scoring thresholds

These actions address symptoms, not causes.

If upstream data is still misaligned, the system simply recreates the same damage with new labels. Pipeline looks cleaner temporarily, then degrades again.

This is how teams get stuck in endless “pipeline hygiene” cycles.

The Silent Role of Data Dependencies

Every pipeline stage depends on assumptions made earlier:

who was targeted

why they were included

which fields defined fit

how recency was interpreted

When any of those assumptions is wrong, downstream pipeline stages inherit the error without knowing it.

The pipeline doesn’t scream. It slowly loses integrity.
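One way to make those inherited assumptions visible is to store them with each account at enrollment, then group stalled opportunities by the assumption that let them in. The sketch below is illustrative only: the Enrollment record, its field names, and the stalled_by helper are hypothetical and do not come from any particular CRM or tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Enrollment:
    """Hypothetical record of the upstream assumptions behind one enrolled account."""
    account: str
    segment: str            # who was targeted
    inclusion_reason: str   # why they were included
    fit_fields: tuple       # which fields defined fit
    data_age_days: int      # how old the data was when recency was judged


def stalled_by(enrollments, stalled_accounts, key):
    """Count stalled accounts grouped by one upstream attribute (e.g. 'inclusion_reason')."""
    stalled = set(stalled_accounts)
    return Counter(getattr(e, key) for e in enrollments if e.account in stalled)


# Made-up example data, for illustration only.
enrollments = [
    Enrollment("acme", "SaaS", "title match", ("title",), 30),
    Enrollment("globex", "SaaS", "headcount range", ("employees",), 200),
    Enrollment("initech", "FinTech", "headcount range", ("employees",), 180),
]

by_reason = stalled_by(enrollments, ["globex", "initech"], "inclusion_reason")
```

If most stalled deals trace back to a single inclusion reason or fit field, that points to an upstream input worth auditing before anyone touches stage definitions.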

Why Teams Misjudge the Root Cause

Pipeline damage feels operational, so it gets operational fixes.

But the root cause is almost always data dependency failure, not execution failure.

The system did exactly what it was told to do—just based on inputs that no longer reflected reality.

That’s why experienced teams still get caught off guard. The system is functioning. It’s just functioning on bad premises.

Cleaning Pipeline Starts Before the Funnel

Teams that stabilize pipeline long-term don’t obsess over stages first. They work upstream:

validating which fields drive inclusion

tightening dependency-critical data

aligning refresh cycles with send cycles

auditing which inputs shape deal flow

They treat pipeline as a downstream signal, not a steering wheel.
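The upstream checks listed above can be sketched as a simple gate that runs before each send. This is a minimal illustration under stated assumptions: the Lead record, the REQUIRED_FIELDS tuple, and the 90-day freshness window are hypothetical choices, not a recommended standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Lead:
    """Hypothetical lead record; field names are assumptions for this sketch."""
    email: str
    title: Optional[str]
    industry: Optional[str]
    verified_on: date  # last date the record was verified


# Assumed set of fields that drive inclusion in a campaign.
REQUIRED_FIELDS = ("title", "industry")


def upstream_issues(lead, send_date, max_age_days=90):
    """Return a list of upstream problems found on one lead (empty if none)."""
    issues = []
    for name in REQUIRED_FIELDS:
        if not getattr(lead, name):
            issues.append(f"missing {name}")
    # Align the refresh cycle with the send cycle: flag data older than the window.
    if send_date - lead.verified_on > timedelta(days=max_age_days):
        issues.append("stale")
    return issues


def split_for_send(leads, send_date, max_age_days=90):
    """Separate leads that pass the upstream checks from those held back."""
    ready, held = [], []
    for lead in leads:
        issues = upstream_issues(lead, send_date, max_age_days)
        if issues:
            held.append((lead, issues))
        else:
            ready.append(lead)
    return ready, held


# Made-up example data, for illustration only.
leads = [
    Lead("a@example.com", "CFO", "FinTech", date(2026, 1, 10)),
    Lead("b@example.com", None, "SaaS", date(2025, 6, 1)),
]
ready, held = split_for_send(leads, send_date=date(2026, 1, 18))
```

Holding records back with a stated reason, rather than silently dropping them, keeps a trail of which inputs were failing and when.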

What This Means

Pipeline damage is rarely a late-stage problem.

It’s an upstream data problem that reveals itself late.

When upstream inputs stay aligned, pipeline behaves predictably.

When they don’t, no amount of downstream cleanup can compensate.

That’s the difference between managing pipeline—and actually protecting it.

