The Decisions Automation Gets Wrong in Cold Email

Automation executes cold email fast, but it often misjudges context, timing, and lead quality. Here’s where automated decisions break down.

INDUSTRY INSIGHTS | LEAD QUALITY & DATA ACCURACY | OUTBOUND STRATEGY | B2B DATA STRATEGY

CapLeads Team

1/17/2026 · 3 min read

Automation didn’t break cold email.

It exposed where humans stopped thinking.

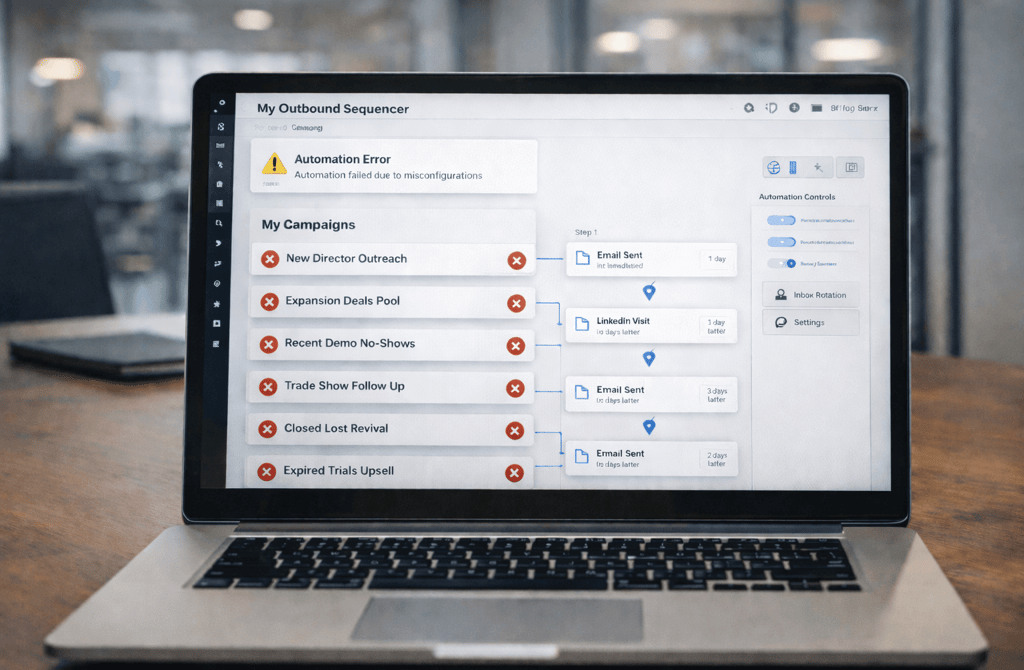

Most outbound failures blamed on “automation” aren’t caused by the tools themselves. They’re caused by delegating decisions that should never have been automated in the first place. Sending is easy to automate. Judgment isn’t.

Cold email is a chain of small decisions stacked together. When even one of those decisions is made without context, the entire sequence looks “fine” on the dashboard—and quietly fails in reality.

Here’s where automation consistently gets it wrong.

1. Who Should Be Emailed Right Now

Automation excels at following rules.

It fails at understanding timing.

Most sequencers decide eligibility based on static fields: title, industry, company size. What they can’t see is whether the contact is in a moment where outreach makes sense. Job changes, internal reshuffles, budget freezes, or hiring pauses all create windows where automation confidently sends—and misses.

This is why teams see “delivered” emails that never convert. The system isn’t wrong technically. It’s wrong contextually.
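One way to picture the gap is a routing step that checks static fit first and then holds anyone with a known timing risk for human review. This is a minimal sketch, not how any particular sequencer works. The field names, target sets, size threshold, and flag names below are all hypothetical, and it assumes the timing flags are supplied by a person or an upstream data source.

```python
from dataclasses import dataclass, field

@dataclass
class Contact:
    title: str
    industry: str
    company_size: int
    # Hypothetical timing flags, filled in by a human or an upstream data source.
    context_flags: set = field(default_factory=set)

# Illustrative targeting rules (assumptions, not recommendations).
TARGET_TITLES = {"head of ops", "vp operations"}
TARGET_INDUSTRIES = {"saas", "fintech"}
MIN_COMPANY_SIZE = 50

# Timing risks named in the section above.
TIMING_RISKS = {"job_change", "internal_reshuffle", "budget_freeze", "hiring_pause"}

def route(contact: Contact) -> str:
    """Return 'send', 'hold_for_review', or 'skip' for one contact."""
    static_match = (
        contact.title.lower() in TARGET_TITLES
        and contact.industry.lower() in TARGET_INDUSTRIES
        and contact.company_size >= MIN_COMPANY_SIZE
    )
    if not static_match:
        return "skip"
    # Static fit is not enough: any known timing risk goes to a person.
    if contact.context_flags & TIMING_RISKS:
        return "hold_for_review"
    return "send"
```

The point of the design is that the static rules only decide who could be emailed. Whether now is the right moment stays with a person whenever a timing flag is present.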

2. Which Signals Actually Matter

Automated systems treat all signals as equal once they’re ingested.

Humans don’t.

A dashboard might show opens, clicks, and engagement history. What it doesn’t know is why those signals happened. Was it curiosity? Accidental opens? A forwarded message? A spam-filter preview?

Automation reacts to surface behavior. Humans interpret intent.

When automated decisions prioritize the wrong signals, follow-ups accelerate in the wrong direction—more emails to people who were never viable to begin with.

3. When to Stop Sending

One of the most damaging automation mistakes is not knowing when to stop.

Sequencers are designed to complete sequences. Humans are designed to notice resistance. Silence after multiple touches, repeated soft bounces, or passive disengagement are all cues to stop. Automation sees these as “incomplete workflows.”

This is how inbox providers learn to distrust senders. Not because of one bad email—but because systems kept pushing after the signal was already clear.
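The stop cues above can be written down as an explicit check that runs before every follow-up. The sketch below is illustrative only. The thresholds and field names are hypothetical and would need tuning against your own sending data, and it assumes open counts are available, which the earlier section notes are a noisy signal.

```python
from dataclasses import dataclass

@dataclass
class ContactHistory:
    touches_sent: int   # emails already sent in this sequence
    replies: int        # replies received from the contact
    soft_bounces: int   # temporary delivery failures so far
    recent_opens: int   # opens recorded across the most recent touches

# Hypothetical thresholds, not industry standards.
MAX_SILENT_TOUCHES = 3
MAX_SOFT_BOUNCES = 2

def should_stop(history: ContactHistory) -> tuple[bool, str]:
    """Return (stop?, reason) before the next touch is scheduled."""
    if history.replies > 0:
        return True, "replied: hand off to a human"
    if history.soft_bounces >= MAX_SOFT_BOUNCES:
        return True, "repeated soft bounces"
    if history.touches_sent >= MAX_SILENT_TOUCHES and history.recent_opens == 0:
        return True, "silence after multiple touches"
    return False, "continue"
```

Returning a reason alongside the decision keeps the stop auditable, so a person can see why a sequence ended instead of finding it marked as an incomplete workflow.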

4. Role Interpretation

Automation assumes titles are stable.

Reality isn’t.

At one company, “Head of Ops” is the buyer. At another, it’s an execution role with no purchasing authority. Automated decisions treat titles as deterministic inputs. Humans recognize nuance: company maturity, reporting structure, and functional overlap.

When automation guesses wrong here, personalization becomes noise. The email might look tailored, but it lands as irrelevant.

5. List Readiness

Perhaps the biggest decision automation gets wrong is assuming a list is ready because it passed a technical check.

Syntax validation doesn’t equal relevance. Deliverable doesn’t mean appropriate. Recently verified doesn’t mean aligned.

Automation moves lists forward because they meet minimum criteria. Humans pause and ask whether the list should be used at all.

That pause is often the difference between a stable campaign and one that slowly degrades domain trust.
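To make the difference between a technical check and a readiness decision concrete, here is a rough sketch that reports the two separately and requires a named approver before a list moves forward. Everything in it is an assumption for illustration: the `fits_icp` flag, the 0.8 threshold, and the deliberately loose email pattern, which checks shape only and says nothing about deliverability.

```python
import re
from dataclasses import dataclass

# Loose shape check only; passing it says nothing about relevance.
EMAIL_SHAPE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

@dataclass
class Lead:
    email: str
    fits_icp: bool  # hypothetical flag set during human or upstream review

def list_report(leads):
    """Count how many leads pass the technical check vs. the relevance check."""
    syntax_ok = [lead for lead in leads if EMAIL_SHAPE.match(lead.email)]
    relevant = [lead for lead in syntax_ok if lead.fits_icp]
    return {"total": len(leads), "syntax_ok": len(syntax_ok), "relevant": len(relevant)}

def ready_to_send(report, approved_by, min_relevant_share=0.8):
    """A list is ready only if enough of it is relevant AND a person signed off."""
    if report["total"] == 0 or not approved_by:
        return False
    return report["relevant"] / report["total"] >= min_relevant_share
```

A list where most addresses pass the syntax check can still fail here, either because too few leads are relevant or because nobody has approved it. That is the pause described above, written as a gate.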

Why This Keeps Happening

Automation tools are built to optimize throughput.

Outbound success depends on restraint.

The mistake isn’t using automation—it’s automating judgment prematurely. Cold email isn’t a manufacturing line. It’s closer to risk management. Every decision compounds.

When humans stay involved at the decision points—who, when, why, and when to stop—automation becomes powerful. When they step away entirely, the system keeps running even as outcomes decline.

What This Means

If your outbound feels unpredictable, the issue usually isn’t volume or copy. It’s decision ownership. Automation should execute decisions humans have already validated—not replace them.

Cold email breaks down when tools are asked to think instead of assist.

Bottom Line

Reliable outbound isn’t built by removing humans from the loop.

It’s built by deciding where humans must stay involved.

Campaigns succeed when decision quality stays high before emails ever go out.

When contact data is current and context-aware, automation amplifies results. When it isn’t, automation quietly compounds mistakes.

