The Hidden Errors Inside Aggregated Lead Lists

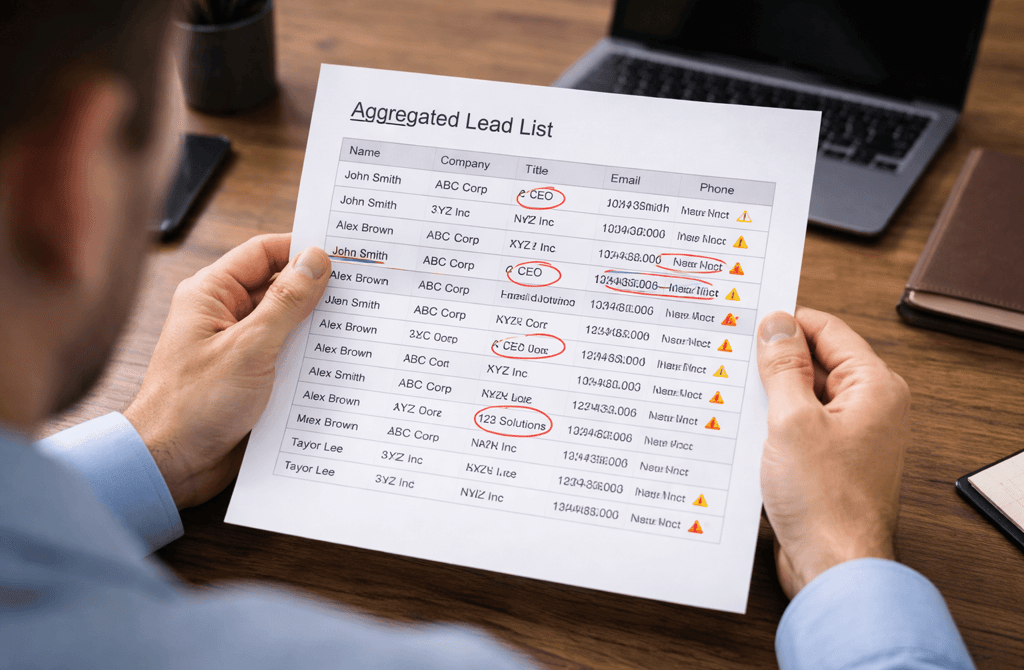

Aggregated lead lists often hide structural errors that surface only during outreach. Learn where these issues come from and why they quietly undermine campaigns.

INDUSTRY INSIGHTS · LEAD QUALITY & DATA ACCURACY · OUTBOUND STRATEGY · B2B DATA STRATEGY

CapLeads Team

1/14/2026 · 3 min read

Most aggregated lead lists don’t look broken.

They look complete.

Rows are filled. Fields are populated. Volumes look impressive. On the surface, nothing appears wrong—which is exactly why aggregated lists are dangerous. Their biggest failures don’t announce themselves early. They surface only after outreach begins, when mistakes are already expensive.

Aggregation doesn’t create bad data.

It hides accountability for bad data.

Aggregation shifts responsibility away from accuracy

When data comes from a single source, errors tend to be consistent. You learn the source’s patterns quickly. Titles drift in predictable ways. Company size may be inflated or understated, but at least it’s systematic.

Aggregation breaks that consistency.

Each source brings its own definition of accuracy, freshness, and completeness. When those records are merged, no single logic governs the final list. Errors don’t cancel each other out—they compound quietly.

What looks like a rich dataset is often just multiple partial truths stitched together.

Errors don’t exist at the row level — they exist at the relationship level

Most people review aggregated lists row by row.

That’s the wrong lens.

The real errors live between rows:

The same contact appears twice with different seniority

Two sources disagree on whether a company is SMB or mid-market

A role exists, but the department mapping conflicts across records

One source updates titles monthly, another quarterly

Individually, each record looks usable.

Collectively, they contradict each other.

Outbound systems don’t resolve contradictions—they amplify them.
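One way to make these between-row contradictions visible is to group records by contact and flag any field that holds more than one distinct value. The sketch below assumes each lead is a flat Python dict keyed by an email address; the field names (`seniority`, `company_size`, `department`) and the `find_conflicts` helper are hypothetical, not a standard schema.

```python
from collections import defaultdict

# Hypothetical lead shape: a flat dict of field name -> value.
Lead = dict[str, str]


def find_conflicts(
    leads: list[Lead],
    key: str = "email",
    fields: tuple[str, ...] = ("seniority", "company_size", "department"),
) -> dict[str, dict[str, list[str]]]:
    """For each contact, list every field whose values disagree across rows."""
    seen: dict[str, dict[str, set[str]]] = defaultdict(lambda: defaultdict(set))
    for lead in leads:
        contact = lead[key].strip().lower()  # normalize so duplicate rows group together
        for field in fields:
            value = lead.get(field)
            if value:  # a missing value is a gap, not a contradiction
                seen[contact][field].add(value)

    # Keep only fields with more than one distinct value: the relationship-level errors.
    conflicts: dict[str, dict[str, list[str]]] = {}
    for contact, by_field in seen.items():
        clashing = {f: sorted(v) for f, v in by_field.items() if len(v) > 1}
        if clashing:
            conflicts[contact] = clashing
    return conflicts


leads = [
    {"email": "ana@example.com", "source": "A", "seniority": "Director", "company_size": "SMB"},
    {"email": "Ana@Example.com", "source": "B", "seniority": "VP", "company_size": "Mid-market"},
    {"email": "li@example.com", "source": "A", "seniority": "Manager"},
]
print(find_conflicts(leads))
# {'ana@example.com': {'seniority': ['Director', 'VP'], 'company_size': ['Mid-market', 'SMB']}}
```

Each of the two "Ana" rows passes a row-by-row review on its own; only the grouped view shows that they disagree on both seniority and company size.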

Aggregation masks age differences between fields

One of the most overlooked problems with aggregated lists is asynchronous aging.

In a blended record:

The email may be recently verified

The job title may be six months old

The department may no longer exist internally

Aggregation presents this as one “complete” contact, but each field carries its own expiration date. Outreach treats the whole record as current. Recipients, and the inbox providers tracking how they engage, do not.

This is how campaigns feel inexplicably “off” despite clean-looking lists.
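A minimal sketch of field-level freshness checking, assuming each record stores a verification date per field rather than a single date for the whole row. The `MAX_AGE_DAYS` budgets and the `stale_fields` helper are illustrative placeholders, not recommended thresholds.

```python
from datetime import date
from typing import Optional

# Hypothetical freshness budgets in days; real values depend on your sources.
MAX_AGE_DAYS = {"email": 90, "job_title": 120, "department": 180}


def stale_fields(verified_on: dict[str, date], today: date) -> dict[str, Optional[int]]:
    """Map each stale field to its age in days (None if it was never verified)."""
    stale: dict[str, Optional[int]] = {}
    for field, budget in MAX_AGE_DAYS.items():
        checked = verified_on.get(field)
        if checked is None:
            stale[field] = None  # no verification date at all
        elif (today - checked).days > budget:
            stale[field] = (today - checked).days
    return stale


# A blended record: fresh email, title roughly six months old, department never checked.
record_dates = {"email": date(2026, 1, 5), "job_title": date(2025, 7, 10)}
print(stale_fields(record_dates, today=date(2026, 1, 14)))
# {'job_title': 188, 'department': None}
```

The record would pass a row-level "is the email verified?" check, yet two of its three fields are past their assumed budgets.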

Aggregated lists blur signal origin

When something breaks, teams ask the wrong question:

“Which lead is bad?”

The better question is:

“Which source logic failed?”

Aggregation removes traceability. Once records are merged, it’s nearly impossible to identify which source introduced which flaw. That makes improvement slow and reactive. The list keeps growing, but learning stalls.

This is why many teams repeatedly fix symptoms:

Adjusting copy

Tweaking cadence

Reducing volume

while the underlying list architecture remains unchanged.
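Traceability can be kept by recording, for every field, which source supplied it. The sketch below is a hypothetical illustration: the `Sourced` wrapper, the source names, and the `bounce_rate_by_source` helper are assumptions. It charges each bounce to the source that supplied the email address rather than to whichever source supplied the title, which turns "which lead is bad?" into "which source logic failed?"

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Sourced:
    """A field value tagged with the (hypothetical) source that supplied it."""
    value: str
    source: str


def bounce_rate_by_source(records: list[dict[str, Sourced]], bounced: set[str]) -> dict[str, float]:
    """Share of each source's supplied emails that bounced."""
    sent: Counter = Counter()
    failed: Counter = Counter()
    for record in records:
        email = record["email"]  # provenance of this one field, not of the whole row
        sent[email.source] += 1
        if email.value in bounced:
            failed[email.source] += 1
    return {source: failed[source] / sent[source] for source in sent}


records = [
    {"email": Sourced("ana@example.com", "source_a"), "job_title": Sourced("VP Sales", "source_b")},
    {"email": Sourced("bo@example.com", "source_a")},
    {"email": Sourced("cy@example.com", "source_b")},
    {"email": Sourced("di@example.com", "source_b")},
]
print(bounce_rate_by_source(records, bounced={"bo@example.com"}))
# {'source_a': 0.5, 'source_b': 0.0}
```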

Volume hides error density

Aggregation creates a psychological trap: scale bias.

A list with 50,000 records feels safer than one with 5,000. But error density matters more than error count. Aggregated lists often contain more subtle inaccuracies per record because they’ve passed multiple loose filters instead of one strict one.

Errors become statistically invisible until scale exposes them.

At that point, inbox placement, reply rates, and domain trust have already paid the price.
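A rough way to see why density outweighs count, assuming each field in a record is independently correct with the same probability p: a record with k fields is fully correct with probability p^k, so at least one field is wrong with probability 1 - p^k. The accuracies and list sizes below are hypothetical inputs, not measured figures.

```python
def share_with_any_error(field_accuracy: float, fields: int) -> float:
    """Probability that at least one of `fields` independent fields is wrong."""
    return 1 - field_accuracy ** fields


def expected_flawed_records(list_size: int, field_accuracy: float, fields: int) -> float:
    """Expected number of records carrying at least one wrong field."""
    return list_size * share_with_any_error(field_accuracy, fields)


# Hypothetical inputs: a large blended list with looser per-field accuracy
# versus a smaller list held to a stricter standard, five fields each.
for size, accuracy in [(50_000, 0.95), (5_000, 0.99)]:
    share = share_with_any_error(accuracy, 5)
    flawed = expected_flawed_records(size, accuracy, 5)
    print(f"{size:>6,} records at {accuracy:.0%} per field: {share:.1%} flawed (~{flawed:,.0f})")
# 50,000 records at 95% per field: 22.6% flawed (~11,311)
#  5,000 records at 99% per field: 4.9% flawed (~245)
```

The rate is what reaches the inbox: under these assumptions, more than one send in five goes to a record with at least one wrong field, and growing the list only multiplies that.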

Aggregation works only when error handling is explicit

Blending data isn’t the problem.

Blending without conflict resolution rules is.

Without clear rules for:

Field precedence

Recency weighting

Source reliability

Conflict suppression

aggregation doesn’t improve reliability—it just increases confidence in the wrong direction.

That false confidence is what makes aggregated lists dangerous. They don’t fail loudly. They fail slowly, and they take performance with them.
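The four rules listed above can be combined into a single resolver. This is a minimal sketch under stated assumptions: the source names, reliability weights, 90-day half-life, precedence table, and 15% margin are all hypothetical, and exponential decay is only one possible way to weight recency. When the top two candidate values score too closely, the resolver returns `None` instead of guessing, which is the conflict-suppression step.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    """One source's claim about a field's value (hypothetical structure)."""
    value: str
    source: str
    observed_on: date


# Hypothetical settings; tune per source and field in practice.
SOURCE_RELIABILITY = {"vendor_a": 0.9, "vendor_b": 0.6, "scraped": 0.4}
UNKNOWN_SOURCE_WEIGHT = 0.1
FIELD_PRECEDENCE = {"email": ["vendor_a"]}  # listed sources win this field outright
HALF_LIFE_DAYS = 90.0  # recency: a claim's weight halves every 90 days
MIN_MARGIN = 0.15      # suppression: winner must beat runner-up by 15% of its score


def resolve(field: str, candidates: list[Candidate], today: date) -> Optional[str]:
    """Choose one value for `field`, or None when the evidence is too close to call."""
    if not candidates:
        return None

    # Field precedence: an authoritative source short-circuits scoring
    # (its most recent claim is used).
    for source in FIELD_PRECEDENCE.get(field, []):
        preferred = [c for c in candidates if c.source == source]
        if preferred:
            return max(preferred, key=lambda c: c.observed_on).value

    # Source reliability times recency decay; identical values pool their scores.
    scores: dict[str, float] = {}
    for c in candidates:
        age_days = (today - c.observed_on).days
        weight = SOURCE_RELIABILITY.get(c.source, UNKNOWN_SOURCE_WEIGHT)
        weight *= 0.5 ** (age_days / HALF_LIFE_DAYS)
        scores[c.value] = scores.get(c.value, 0.0) + weight

    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    best_value, best_score = ranked[0]

    # Conflict suppression: refuse to pick when the runner-up is too close.
    if len(ranked) > 1 and best_score - ranked[1][1] < MIN_MARGIN * best_score:
        return None
    return best_value


today = date(2026, 1, 14)
title = resolve(
    "job_title",
    [
        Candidate("VP Sales", "vendor_a", today - timedelta(days=188)),
        Candidate("Head of Sales", "vendor_b", today - timedelta(days=9)),
    ],
    today,
)
print(title)  # Head of Sales
```

In this sketch, a six-month-old title from the more reliable source loses to a nine-day-old title from a weaker one, and agreement between sources adds weight because scores for the same value are summed.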

What This Means in Practice

Aggregated lists don’t fail because fields are empty.

They fail because conflicting truths are allowed to coexist without resolution.

When data sources disagree and no system decides which one wins, outreach loses coherence long before results drop. Campaigns stall not from lack of effort, but from signals pulling in different directions at once.

Outbound becomes reliable when every record tells one consistent story.

When aggregation preserves contradictions instead of resolving them, performance feels unstable—even with clean-looking lists.

