The Hidden RevOps Data Dependencies Embedded in Lead Quality

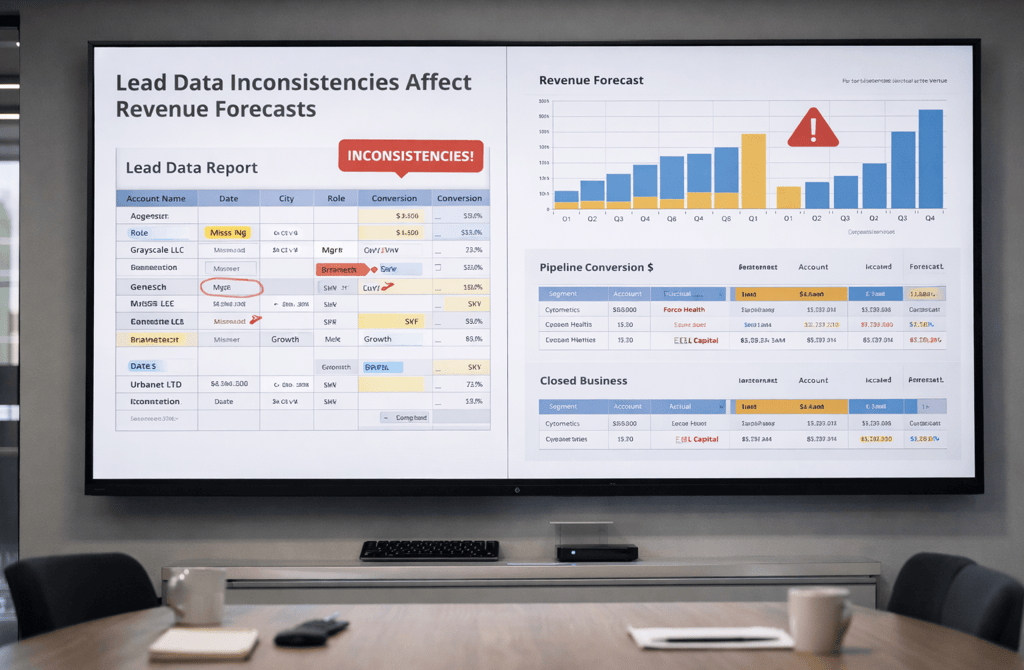

Lead quality issues don’t just affect outreach. They quietly break revenue reporting, forecasting accuracy, and system-level alignment.


CapLeads Team

1/16/2026 · 3 min read

Lead quality is usually treated as a front-of-funnel problem. Bad leads mean poor replies. Good leads mean better outreach. Simple.

But inside a RevOps data framework, lead quality is something else entirely. It’s a dependency layer—one that quietly determines whether downstream systems behave logically or start producing contradictions no one can explain.

That’s why teams can “fix” lead quality and still feel like revenue reporting, forecasting, and prioritization are off. They solved the surface issue, not the dependency problem underneath it.

Lead Quality Isn’t a Single Variable in RevOps Systems

In RevOps, lead quality isn’t one field or score. It’s a composite input that feeds multiple systems at once.

A single lead record influences:

routing logic

scoring models

attribution paths

pipeline stage expectations

When lead quality is weak, every one of those systems absorbs distorted inputs. Each system may still function correctly in isolation—but the combined output stops making sense.

This is how RevOps failures start without triggering obvious errors.

Dependencies Multiply as Data Moves Downstream

In a RevOps framework, data doesn’t flow linearly. It branches.

The same lead data might be used to:

assign ownership

calculate expected value

trigger automation

update dashboards

If the original lead data is incomplete, outdated, or loosely defined, each downstream dependency interprets it differently. Small inaccuracies turn into structural inconsistencies.

What looks like a “reporting issue” is often a dependency mismatch that started at the lead level.
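The following minimal Python sketch makes the branching concrete. It assumes a hypothetical lead record whose `employee_count` field is missing. The three consumers (`route`, `size_points`, `expected_value`), the segment thresholds, and the deal sizes are illustrative assumptions and are not taken from any real CRM. Each function handles the gap in its own reasonable-looking way, yet together they disagree about what kind of account the lead is.

```python
from typing import Dict, Optional, TypedDict

# Hypothetical average deal size per segment, for illustration only.
AVG_DEAL: Dict[str, int] = {"smb": 5_000, "mid_market": 25_000, "enterprise": 100_000}


class Lead(TypedDict):
    email: str
    employee_count: Optional[int]


def segment_for(size: int) -> str:
    """Map a headcount to a segment using illustrative thresholds."""
    if size >= 1000:
        return "enterprise"
    if size >= 100:
        return "mid_market"
    return "smb"


def route(lead: Lead) -> str:
    # Routing coerces a missing headcount to 0, so the lead lands in the SMB queue.
    return segment_for(lead["employee_count"] or 0)


def size_points(lead: Lead) -> int:
    # Scoring simply awards no size points when the field is missing.
    size = lead["employee_count"]
    if size is None:
        return 0
    return {"smb": 10, "mid_market": 20, "enterprise": 30}[segment_for(size)]


def expected_value(lead: Lead) -> int:
    # Forecasting backfills a missing headcount with a mid-market assumption.
    size = lead["employee_count"]
    segment = "mid_market" if size is None else segment_for(size)
    return AVG_DEAL[segment]


if __name__ == "__main__":
    lead: Lead = {"email": "jane@example.com", "employee_count": None}
    # Every function succeeds, but they no longer describe the same account.
    print(route(lead), size_points(lead), expected_value(lead))
```

Run as a script, it prints `smb 0 25000`. Routing treats the lead as SMB, forecasting values it as mid-market, and nothing raises an error.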

Why These Failures Are Hard to Detect

Hidden dependencies don’t break loudly.

There’s no alert when:

a lead is routed correctly but segmented incorrectly

a score updates but attribution doesn’t

a pipeline value reflects assumptions that no longer match reality

Everything still updates. Numbers still move. Dashboards still refresh.

The problem only becomes visible when teams compare outputs across systems and can’t reconcile them. At that point, RevOps is already in reactive mode—explaining discrepancies instead of preventing them.
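A scheduled reconciliation check is one way to surface these mismatches on purpose instead of by accident. The sketch below assumes each system can export a hypothetical `{lead_id: segment}` mapping. The `reconcile` function is illustrative, not part of any specific tool. It lists every lead whose segment disagrees across systems, and it reports absent leads as `"<missing>"` rather than silently skipping them.

```python
from typing import Dict, List, Tuple


def reconcile(sources: Dict[str, Dict[str, str]]) -> List[Tuple[str, Dict[str, str]]]:
    """Return leads whose segment differs across systems, with each system's value.

    `sources` maps a system name to {lead_id: segment}. A lead absent from a
    system is reported as "<missing>" so gaps surface instead of being skipped.
    """
    all_ids: List[str] = sorted(set().union(*(s.keys() for s in sources.values())))
    mismatches: List[Tuple[str, Dict[str, str]]] = []
    for lead_id in all_ids:
        values = {name: data.get(lead_id, "<missing>") for name, data in sources.items()}
        # More than one distinct value means the systems disagree about this lead.
        if len(set(values.values())) > 1:
            mismatches.append((lead_id, values))
    return mismatches


if __name__ == "__main__":
    sources = {
        "routing": {"L-1": "smb", "L-2": "enterprise"},
        "attribution": {"L-1": "smb", "L-2": "mid_market"},
        "forecast": {"L-1": "smb"},
    }
    for lead_id, values in reconcile(sources):
        print(lead_id, values)
```

In this example only `L-2` is flagged, because routing, attribution, and forecasting each hold a different view of it.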

Lead Quality Shapes System Behavior, Not Just Outcomes

Most teams judge lead quality by outcomes: replies, meetings, conversions.

RevOps frameworks care about something else: system behavior.

High-quality lead data ensures:

routing rules fire consistently

scoring thresholds remain meaningful

segmentation stays stable over time

Low-quality lead data causes systems to drift. Logic still executes, but the assumptions behind that logic slowly become invalid.

This is why RevOps teams often feel like they’re constantly tuning rules that “used to work.” The rules didn’t break. The dependencies feeding them changed.
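One way to catch this drift before rules need retuning is to track how often the fields behind those rules arrive empty. The following is a minimal sketch, assuming leads arrive in batches as plain dictionaries. The field names, the helper functions, and the 5-percentage-point tolerance are illustrative assumptions, not a recommended threshold.

```python
from typing import Dict, Iterable, List, Mapping


def null_rates(leads: List[Mapping[str, object]], fields: Iterable[str]) -> Dict[str, float]:
    """Share of leads in a batch where each field is missing or empty."""
    total = len(leads)
    rates: Dict[str, float] = {}
    for name in fields:
        missing = sum(1 for lead in leads if lead.get(name) in (None, ""))
        # An empty batch reports 0.0 rather than dividing by zero.
        rates[name] = missing / total if total else 0.0
    return rates


def drifted_fields(
    baseline: Dict[str, float], current: Dict[str, float], tolerance: float = 0.05
) -> List[str]:
    """Fields whose missing-rate rose by more than `tolerance` versus the baseline."""
    # A field absent from `current` is treated as fully missing (rate 1.0).
    return sorted(
        name for name, rate in baseline.items() if current.get(name, 1.0) - rate > tolerance
    )


if __name__ == "__main__":
    fields = ["industry", "employee_count"]
    baseline = null_rates(
        [{"industry": "SaaS", "employee_count": 120}, {"industry": "FinTech", "employee_count": 40}],
        fields,
    )
    current = null_rates(
        [{"industry": "SaaS", "employee_count": None}, {"industry": "FinTech", "employee_count": None}],
        fields,
    )
    print(drifted_fields(baseline, current))  # ['employee_count']
```

The routing and scoring rules themselves never change in this example. The check flags that the input they assume has quietly stopped arriving.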

Frameworks Fail When Dependencies Aren’t Explicit

One of the biggest mistakes in RevOps design is treating lead quality as an isolated upstream concern.

In reality, lead quality is a shared dependency across multiple frameworks:

revenue modeling

forecasting

outbound prioritization

performance analysis

When those dependencies aren’t explicitly defined and protected, each system evolves independently. Over time, alignment degrades—not because teams disagree, but because the data foundation no longer supports shared logic.

This is how RevOps frameworks slowly lose coherence.

Protecting Dependencies Is a Design Problem

Strong RevOps teams don’t just clean data. They design frameworks that assume data will decay and protect critical dependencies accordingly.

That means:

defining which lead fields power which systems

validating those fields continuously

preventing silent fallback logic when data degrades
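The three practices above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. `FIELD_DEPENDENCIES`, the system names, and `DependencyError` are hypothetical, and in practice such a map might live in CRM or pipeline configuration rather than in application code. The key design choice is that `require_fields` raises an error instead of substituting a default. A degraded field therefore stops a system visibly rather than skewing its output.

```python
from typing import Dict, List, Mapping, Set

# Hypothetical map of which lead fields each downstream system depends on.
FIELD_DEPENDENCIES: Dict[str, Set[str]] = {
    "employee_count": {"routing", "scoring", "forecasting"},
    "industry": {"segmentation", "scoring"},
    "email": {"routing", "attribution"},
}


class DependencyError(ValueError):
    """Raised instead of letting a system silently fall back to a default."""


def blocked_systems(lead: Mapping[str, object]) -> Dict[str, List[str]]:
    """Map each system to the required fields this lead is missing."""
    blocked: Dict[str, List[str]] = {}
    for field_name, systems in FIELD_DEPENDENCIES.items():
        if lead.get(field_name) in (None, ""):
            for system in systems:
                blocked.setdefault(system, []).append(field_name)
    # Sort for stable, readable output (sets have no guaranteed order).
    return {system: sorted(fields) for system, fields in sorted(blocked.items())}


def require_fields(lead: Mapping[str, object], system: str) -> None:
    """Fail loudly if `system` would run on a lead missing a field it depends on."""
    missing = blocked_systems(lead).get(system, [])
    if missing:
        raise DependencyError(f"{system} cannot run: missing {', '.join(missing)}")


if __name__ == "__main__":
    lead = {"email": "jane@example.com", "industry": "SaaS", "employee_count": None}
    print(blocked_systems(lead))
    require_fields(lead, "segmentation")  # passes: industry is present
    try:
        require_fields(lead, "routing")
    except DependencyError as err:
        print(err)  # routing cannot run: missing employee_count
```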

When dependencies are explicit, lead quality stops being a vague concept and becomes a measurable system requirement.

RevOps frameworks regain predictability not by adding complexity, but by defending the data relationships that keep systems aligned.

What This Means in Practice

Lead quality problems don’t stay at the top of the funnel. Inside RevOps frameworks, they propagate through every system that depends on them.

When lead data is treated as a foundational dependency, RevOps frameworks remain stable and explainable.

When it’s treated as an isolated hygiene task, systems slowly diverge and decisions start contradicting each other.

Predictable revenue systems aren’t built on perfect data—but they do depend on knowing which data relationships must never be allowed to drift.

