The Quality Gap Between Algorithmic and Human Validation

Accuracy in B2B data isn’t just about passing checks. This article explains the quality gap between algorithmic validation and human judgment.

INDUSTRY INSIGHTSLEAD QUALITY & DATA ACCURACYOUTBOUND STRATEGYB2B DATA STRATEGY

CapLeads Team

2/3/20263 min read

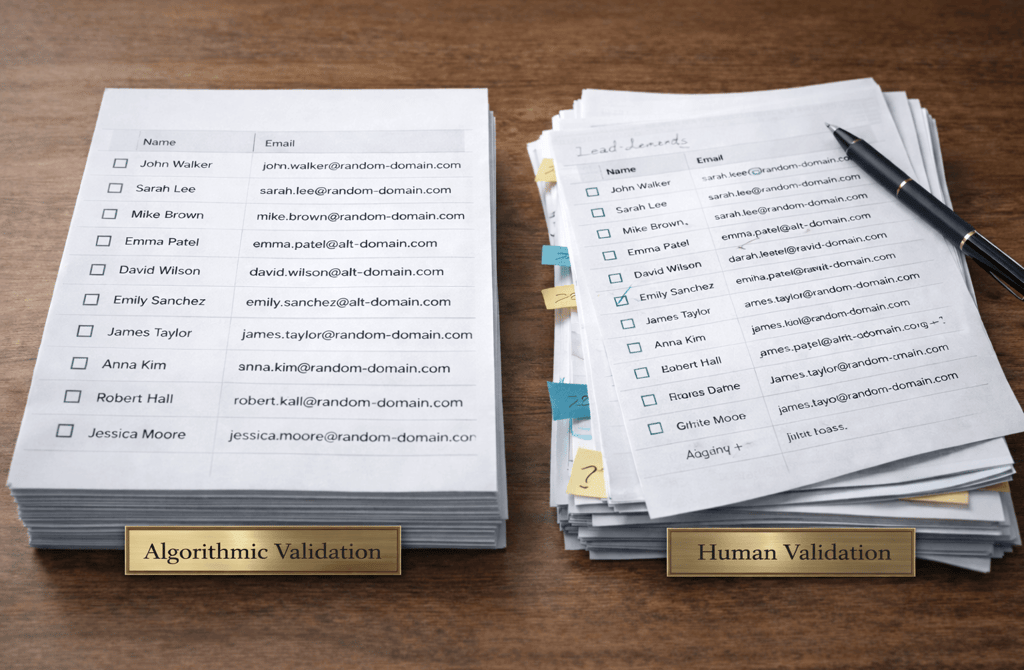

Two datasets can pass the same validation checks and still perform very differently in outbound.

That difference isn’t random, and it isn’t luck. It comes from how “quality” is defined in the first place — and whether validation stops at technical correctness or extends into real-world usability.

This is where algorithmic and human validation quietly diverge.

What Algorithms Mean When They Say “Valid”

Algorithmic validation is designed to answer narrow, well-defined questions:

Does this email resolve?

Does the domain accept messages?

Does the format comply with standards?

These checks are precise and repeatable. That’s their advantage.

But they also define quality in the smallest possible unit: the individual field. If each field passes its rule set, the record is accepted.

From an algorithm’s perspective, consistency equals quality.

From an outbound perspective, that definition is incomplete.

Human Validation Uses a Different Quality Standard

Humans don’t validate records in isolation. They validate them in context.

A human reviewer looks beyond correctness and evaluates:

Whether the role still maps to how decisions are made

Whether the contact fits the intent of the campaign

Whether the record feels usable given how outbound systems behave

This isn’t subjective guesswork. It’s experience applied to system behavior.

Human validation defines quality as fitness for use, not rule compliance.

Why the Same “Valid” Data Produces Different Results

This is the heart of the quality gap.

Algorithmic systems treat all passing records as equal. Human reviewers do not.

Two emails can both be deliverable, yet:

One generates replies

The other trains inbox systems to distrust future sends

Nothing is technically wrong with the second email. It simply doesn’t belong in the same way.

Algorithms approve eligibility. Humans evaluate impact.

Quality Decay Doesn’t Trigger Errors

One reason this gap goes unnoticed is that quality degradation doesn’t look like failure.

Emails still send.

Bounce rates stay acceptable.

Dashboards remain green.

What changes is behavior:

Replies become less consistent

Engagement flattens

More volume is required to maintain the same outcomes

These are quality problems, not validation failures — and algorithmic systems aren’t built to flag them.

Why Algorithms Can’t Close the Gap on Their Own

Algorithms can only enforce the rules they’re given.

They don’t understand:

Buying context

How inbox providers evaluate patterns over time

Human validation operates closer to how outbound is actually judged — by results, not checkmarks.

That’s why automation alone can’t bridge the quality gap, no matter how sophisticated the rules become.

Where Human Validation Has Disproportionate Impact

Human review matters most at leverage points:

Before it’s reused at scale

Before it enters long-running systems

A small improvement in quality here prevents large downstream corrections later. This is where judgment delivers its highest return.

The Real Cost of Ignoring the Gap

Teams that rely entirely on algorithmic validation often respond to declining performance by:

Tweaking copy

Adjusting cadence

Changing infrastructure

Meanwhile, the underlying issue remains untouched.

The data is technically valid — but no longer fit for purpose.

What This Means

Algorithmic validation ensures consistency.

Human validation ensures relevance.

Outbound performance depends on both, but quality is defined by outcomes, not rules.

When human judgment is part of validation, data stays aligned with how outbound systems actually behave.

When it isn’t, the quality gap widens quietly until “clean” data stops producing clean results.

Quality isn’t about passing checks.

It’s about holding up once the system is live.

Related Post:

Why Aged Leads Attract More Spam Filter Scrutiny

The Silent Errors That Occur When Providers Skip Manual Review

How Deep Validation Reveals Problems Basic Checkers Can’t Detect

The Multi-Step Verification Process Behind Reliable Lead Lists

Why Cheap Tools Miss the Most Dangerous Email Types

The Difference Between Syntax Checks and Real Verification

The Bounce Threshold That Signals a System-Level Problem

How Email Infrastructure Breaks When You Use Aged Lists

The Real Reason Bounce Spikes Destroy Send Reputation

Why High-Bounce Industries Need Stricter Data Filters

How Bounce Risk Changes Based on Lead Source Quality

The Drift Timeline That Shows When Lead Lists Lose Accuracy

How Decay Turns High-Quality Leads Into Wasted Volume

Why Job-Role Drift Makes Personalization Completely Wrong

The ICP Errors Caused by Data That Aged in the Background

How Lead Aging Creates False Confidence in Your Pipeline

The Data Gaps That Cause Personalization to Miss the Mark

How Missing Titles and Departments Distort Your ICP Fit

Why Incomplete Firmographic Data Leads to Wrong-Account Targeting

The Enrichment Signals That Predict Stronger Reply Rates

How Better Data Completeness Improves Email Relevance

The Subtle Signals Automation Fails to Interpret

Why Human Oversight Is Essential for Accurate B2B Data

How Automated Tools Miss High-Risk Email Patterns

Connect

Get verified leads that drive real results for your business today.

www.capleads.org

© 2025. All rights reserved.

Serving clients worldwide.

CapLeads provides verified B2B datasets with accurate contacts and direct phone numbers. Our data helps startups and sales teams reach C-level executives in FinTech, SaaS, Consulting, and other industries.