Why Cheap Tools Miss the Most Dangerous Email Types

Cheap email validation tools catch obvious issues but miss high-risk address types that quietly damage deliverability, targeting accuracy, and outbound performance.


CapLeads Team

1/30/2026 · 3 min read

The most dangerous emails are rarely the ones that fail checks.

They’re the ones that pass.

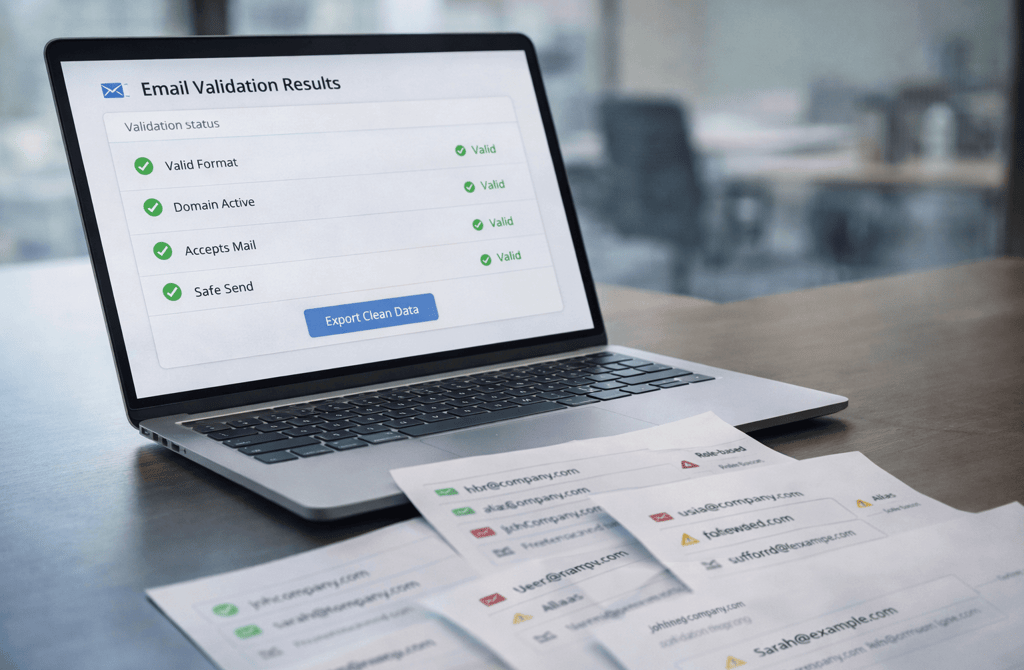

Cheap validation tools are optimized to catch clear violations: invalid syntax, dead domains, obvious bounces. When those checks pass, the email is labeled safe and pushed forward. The problem is that modern deliverability failures are no longer driven by obvious errors — they’re driven by misclassified risk.

And misclassification is where shallow tools struggle most.

The Shift from Invalid to Unstable

Years ago, email risk was binary. An address either existed or it didn’t.

Today, the riskiest emails technically exist, accept mail, and behave normally at first. They don’t bounce. They don’t trigger immediate errors. They sit in a gray zone where infrastructure tolerates them — until patterns emerge.

Cheap tools are built for the old model. They answer the question “Can this email receive mail?”

They don’t answer “Should this email be used for outbound?”

That gap is where damage accumulates.

The Email Types That Slip Through First

Shallow validation tends to miss emails that are structurally valid but operationally dangerous, such as:

Role-based addresses that technically exist but attract negative engagement

Aliases that forward unpredictably inside large organizations

Catch-all domains that mask inactive or unmonitored inboxes

Shared inboxes that distort engagement signals

None of these fail basic checks. In fact, many of them improve superficial metrics, such as delivery and open rates, early on.

That’s what makes them dangerous.
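The gap between "can receive mail" and "should be used for outbound" can be sketched as two separate fields rather than one pass/fail label. This is only a minimal illustration: the `ROLE_PREFIXES` list, the `Assessment` type, and the `assess` function are hypothetical names, and the deliverability result and the set of catch-all domains are assumed to come from whatever checks you already run.

```python
from dataclasses import dataclass, field

# Illustrative list only; real role-based prefixes vary by organization.
ROLE_PREFIXES = {"info", "sales", "support", "admin", "contact", "hello", "billing", "office"}


@dataclass
class Assessment:
    email: str
    deliverable: bool  # result of a basic check, supplied by the caller
    risk_flags: list[str] = field(default_factory=list)

    @property
    def use_for_outbound(self) -> bool:
        # Being deliverable is necessary but not sufficient.
        return self.deliverable and not self.risk_flags


def assess(email: str, deliverable: bool, catch_all_domains: set[str]) -> Assessment:
    """Attach operational risk flags to an address that already passed basic checks."""
    local, _, domain = email.lower().rpartition("@")
    result = Assessment(email=email, deliverable=deliverable)
    if local in ROLE_PREFIXES:
        result.risk_flags.append("role_based")
    if domain in catch_all_domains:
        result.risk_flags.append("catch_all_domain")
    return result


example = assess("info@example.com", deliverable=True, catch_all_domains={"example.com"})
print(example.deliverable, example.use_for_outbound, example.risk_flags)
```

Aliases and shared inboxes are left out on purpose: they generally cannot be inferred from the address string alone, which is part of why they slip through.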

Why These Emails Hurt Without Bouncing

The real impact isn’t bounce rate — it’s signal corruption.

When risky emails are included:

Opens don’t correlate with interest

Replies drop without obvious cause

Spam complaints rise subtly, not immediately

Inbox placement degrades unevenly across segments

From the outside, it looks like normal variance. Internally, reputation systems are learning the wrong lessons from your sends.

Cheap tools don’t flag these emails because nothing is technically wrong. The risk is behavioral, not mechanical.

The Cost Bias That Shapes Tool Design

Low-cost tools are built to minimize false positives, meaning usable addresses that get wrongly rejected.

They’d rather mark a risky email as safe than accidentally block a usable one. This makes sense for volume-driven use cases, but it creates blind spots for outbound teams that care about downstream behavior.

The result is a consistent pattern:

The most obvious bad emails are removed

The most harmful ones remain

By the time teams notice, the damage is already diffused across campaigns and domains.
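The trade-off can be made concrete with a toy example. The scores and labels below are made up purely for illustration, and the `confusion` helper is a hypothetical name. The example assumes each address carries a single risk score and is rejected when the score meets a threshold.

```python
# (risk_score, actually_harmful) pairs; made-up values for illustration only.
SAMPLE = [
    (0.95, True),   # dead domain
    (0.90, True),   # hard bounce
    (0.60, True),   # role-based inbox
    (0.55, True),   # catch-all domain
    (0.50, False),  # usable address that merely looks unusual
    (0.20, False),
    (0.10, False),
]


def confusion(threshold: float) -> dict[str, int]:
    """Count errors when addresses scoring >= threshold are rejected."""
    false_positives = sum(1 for score, harmful in SAMPLE if score >= threshold and not harmful)
    false_negatives = sum(1 for score, harmful in SAMPLE if score < threshold and harmful)
    return {"false_positives": false_positives, "false_negatives": false_negatives}


lenient = confusion(0.80)  # tuned to never block a usable address
strict = confusion(0.45)   # tuned to catch the quieter risks
print("lenient:", lenient)
print("strict:", strict)
```

With the lenient threshold, no usable address is blocked, but both of the quieter harmful addresses pass. The strict threshold only helps here because the made-up scores already encode behavioral risk; a tool that never measures that signal cannot reach it by moving a threshold.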

Why More Rules Don’t Fix the Problem

Adding stricter filters doesn’t solve misclassification.

You can tighten syntax checks, add more domain rules, and raise rejection thresholds. But the riskiest emails don’t violate rules; they sit in the gaps the rules can’t see.

What’s missing isn’t enforcement. It’s interpretation:

How this email behaves relative to others

How similar emails have performed historically

How engagement patterns cluster over time

Those insights don’t come from single-pass validation.

Where Real Protection Comes From

Catching dangerous emails requires looking at them in context:

Relative behavior inside a segment

Consistency across similar domains or roles

How engagement patterns evolve, not just whether they exist

This is why cheap tools appear to work — until they’re used repeatedly under real outbound conditions.

They’re not failing at detection.

They’re failing at risk classification.
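One way to picture "relative behavior inside a segment" is to compare each address against its segment's baseline instead of judging it alone. The sketch below is an assumption-laden illustration, not a recommended rule: `flag_unstable`, the `min_sends` and `ratio` defaults, and the "many opens, no replies" heuristic are all hypothetical choices.

```python
from statistics import median


def flag_unstable(segment: dict[str, dict[str, int]],
                  min_sends: int = 5,
                  ratio: float = 2.0) -> list[str]:
    """Flag addresses that open far more than the segment median but never reply."""
    # Only consider addresses with enough history to compare.
    rates = {
        email: stats["opens"] / stats["sends"]
        for email, stats in segment.items()
        if stats["sends"] >= min_sends
    }
    if not rates:
        return []
    baseline = median(rates.values())
    return sorted(
        email
        for email, rate in rates.items()
        if rate > 0 and rate >= ratio * baseline and segment[email]["replies"] == 0
    )


segment = {
    "a@x.test": {"sends": 10, "opens": 3, "replies": 1},
    "b@x.test": {"sends": 10, "opens": 2, "replies": 0},
    "c@x.test": {"sends": 10, "opens": 4, "replies": 1},
    "team@x.test": {"sends": 10, "opens": 9, "replies": 0},
    "new@x.test": {"sends": 2, "opens": 2, "replies": 0},  # too little history
}
print(flag_unstable(segment))
```

The point of the design is that the same open rate can be normal in one segment and suspicious in another, so the baseline is computed per segment and the function needs several sends before it says anything.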

What This Changes in Practice

The emails that damage outbound rarely announce themselves.

They pass checks. They send fine. They look normal in dashboards. And they quietly train inbox providers to distrust future sends.

Avoiding that outcome isn’t about spending more for the sake of it. It’s about recognizing that validation isn’t just about filtering errors — it’s about identifying instability before it compounds.

The most dangerous email types aren’t the ones that bounce.

They’re the ones that look safe long enough to do real damage.

