The Subtle Signals Automation Fails to Interpret

Automation catches patterns — but misses context. This article breaks down the subtle data signals only human judgment can interpret, and why relying on automation alone creates hidden outbound risk.


CapLeads Team

2/3/2026 · 3 min read

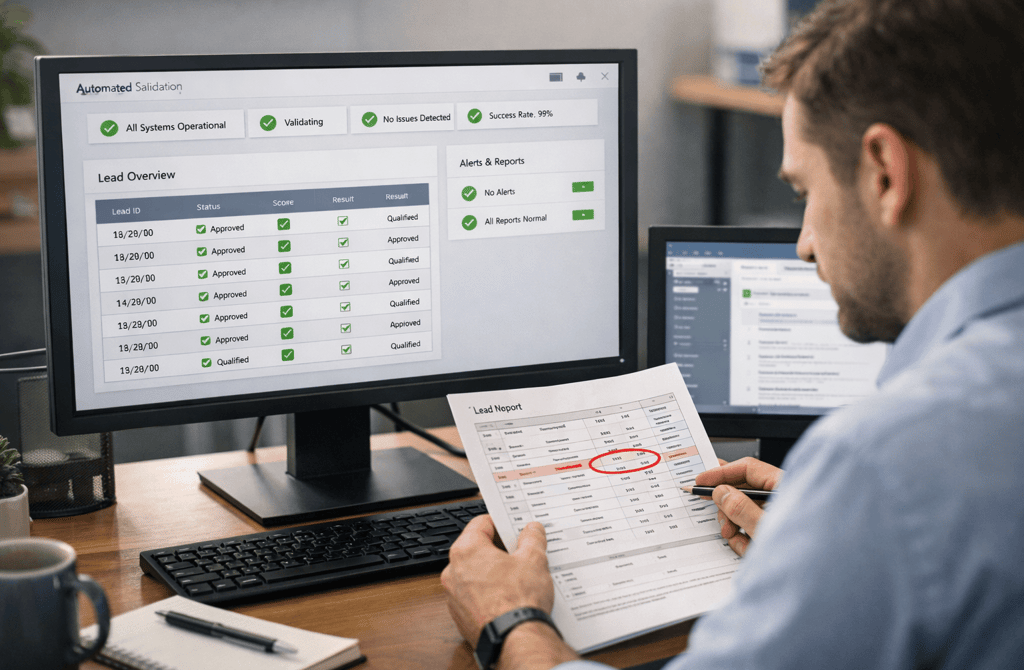

Automation is excellent at confirming what already looks correct.

Where it fails is in recognizing when something technically valid is quietly becoming operationally dangerous.

Most outbound systems don’t collapse because automation breaks. They fail because automation keeps approving data that no longer behaves the way the system assumes it does.

That gap — between correctness and context — is where risk accumulates.

When “Passing” Data Isn’t Actually Safe

Modern validation tools are built to answer binary questions:

Does the email exist?

Does the domain accept mail?

Does the format look correct?

Those checks are necessary. They are also incomplete.
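
As a rough illustration, the three binary checks above can be modeled as independent pass/fail results. This is a minimal Python sketch, not any particular tool's implementation: `EMAIL_PATTERN`, `CheckResult`, and `validate` are hypothetical names, the regex is intentionally simplistic, and the domain and mailbox results are passed in as precomputed booleans rather than looked up over the network.

```python
import re
from dataclasses import dataclass

# Deliberately simple pattern for illustration; real address syntax is more permissive.
EMAIL_PATTERN = re.compile(r"^[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}$")


@dataclass
class CheckResult:
    format_ok: bool
    domain_accepts_mail: bool
    mailbox_exists: bool

    @property
    def passed(self) -> bool:
        # A record "passes" only if every binary check says yes.
        return self.format_ok and self.domain_accepts_mail and self.mailbox_exists


def validate(email: str, domain_accepts_mail: bool, mailbox_exists: bool) -> CheckResult:
    # The two boolean arguments stand in for MX and SMTP lookups (assumed to be done elsewhere).
    format_ok = EMAIL_PATTERN.match(email) is not None
    return CheckResult(format_ok, domain_accepts_mail, mailbox_exists)


result = validate("jane.doe@example.com", domain_accepts_mail=True, mailbox_exists=True)
print(result.passed)
```

Each check here looks at one fact in isolation, which is why a record can pass all three and still be a poor target.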

What automation cannot reliably interpret are behavioral contradictions — signals that only make sense when multiple fields are considered together. An email can pass syntax, domain, and SMTP checks while still being the wrong contact for outreach.

For example:

A role title that technically exists, but no longer maps to buying authority

A domain that accepts mail but recently changed internal routing

A contact that hasn’t bounced yet — but no longer belongs in the department being targeted

Automation sees “valid.” Humans see misalignment.

Context Is Not a Field Automation Can Validate

Most automated systems evaluate fields independently. Humans evaluate relationships between fields.

Automation struggles when:

Seniority signals don’t match department structure

Contact roles appear correct in isolation but fail in real-world workflows

These are not edge cases. They are common failure points in scaling outbound.
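
One way to picture the difference is a check that compares fields against each other instead of one at a time. The sketch below rests on several assumptions: the `Contact` fields, the `SENIORITY_KEYWORDS` table, and the flag names are all hypothetical, and real title taxonomies are far messier than a keyword list.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Contact:
    email: str
    title: str
    seniority: str   # assumed labels: "c_level", "vp", "manager"
    department: str


# Hypothetical keywords a title is expected to contain for each seniority label.
SENIORITY_KEYWORDS = {
    "c_level": ("chief", "ceo", "cfo", "cto"),
    "vp": ("vp", "vice president"),
    "manager": ("manager", "head of", "lead"),
}


def has_keyword(title: str, keyword: str) -> bool:
    # Whole-word match so that "cto" does not match inside "director".
    return re.search(r"\b" + re.escape(keyword) + r"\b", title.lower()) is not None


def cross_field_flags(contact: Contact, target_department: str) -> List[str]:
    """Return the contradictions found between fields (empty list if none)."""
    flags = []
    expected = SENIORITY_KEYWORDS.get(contact.seniority, ())
    if expected and not any(has_keyword(contact.title, k) for k in expected):
        flags.append("seniority_title_mismatch")
    if contact.department.lower() != target_department.lower():
        flags.append("department_mismatch")
    return flags


contact = Contact("sam@example.com", "Marketing Coordinator", "vp", "Marketing")
print(cross_field_flags(contact, target_department="Marketing"))
```

A rule set like this only surfaces contradictions. Deciding whether a flagged contact is still worth emailing remains a judgment call for a reviewer.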

A validation tool can confirm that an address exists. It cannot confirm whether the person behind that address is still the right person to email — or whether sending to them reinforces negative engagement signals.

That distinction matters more than most teams realize.

Why Automation Misses Early Warning Signals

Automation is reactive by design: it responds to explicit failures such as hard bounces or syntax errors. Human reviewers can spot trend anomalies before a failure occurs.

Examples of subtle signals automation often misses:

Titles that remain unchanged while responsibilities shift

Departments that still exist on paper but no longer control buying decisions

Domains that technically pass validation but show abnormal response behavior across campaigns

These signals don’t trigger alerts. They require interpretation.

By the time automation flags a problem, the damage is often already visible in:

Reply rate decay

Rising soft bounces

Domain reputation pressure

Human review operates earlier in the failure curve.
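
To make "earlier in the failure curve" concrete, here is a hedged sketch of a trend check that raises a domain for review while its hard-bounce count is still zero. The `CampaignStats` shape, the `decay_flag` function, and the 40% relative-drop threshold are assumptions chosen for illustration, not recommended values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CampaignStats:
    sent: int
    replies: int
    hard_bounces: int


def reply_rate(stats: CampaignStats) -> float:
    return stats.replies / stats.sent if stats.sent else 0.0


def decay_flag(history: List[CampaignStats], max_relative_drop: float = 0.4) -> bool:
    """Flag when the latest reply rate falls well below the average of earlier campaigns."""
    if len(history) < 3:
        return False  # too little history to call anything a trend
    *earlier, latest = history
    baseline = sum(reply_rate(s) for s in earlier) / len(earlier)
    if baseline == 0:
        return False
    return reply_rate(latest) < baseline * (1 - max_relative_drop)


# One domain across three campaigns: no hard bounces, but replies are fading.
fading = [CampaignStats(200, 12, 0), CampaignStats(200, 10, 0), CampaignStats(200, 5, 0)]
stable = [CampaignStats(200, 12, 0), CampaignStats(200, 11, 0), CampaignStats(200, 12, 0)]

print(decay_flag(fading), decay_flag(stable))
```

A bounce-based alert would stay silent on both domains. The flag only marks the first one as worth a closer look; it does not explain why replies are fading.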

The Cost of Trusting Automation Alone

Teams that rely exclusively on automated validation often misdiagnose performance issues.

When replies drop, they adjust copy.

When deliverability weakens, they adjust infrastructure.

When bounce rates creep up, they blame volume.

But the root issue is frequently data interpretation, not data existence.

Automation confirms eligibility. Humans confirm suitability.

Outbound systems that skip human review tend to accumulate:

Role drift that breaks personalization logic

Gradual reputation erosion without obvious triggers

None of this shows up in a single dashboard.

Human Judgment Is a Control Layer, Not a Bottleneck

Human review isn’t about rechecking everything automation already did. It’s about evaluating what automation cannot reason about.

Strong systems use humans to:

Resolve conflicts between fields

Identify contacts that technically pass but strategically fail

Apply business logic automation cannot infer

This isn’t slower. It’s preventative.

A small amount of human oversight upstream prevents large-scale correction downstream — especially once volume increases.
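
In pipeline terms, this control layer can be as simple as a routing rule: records that fail validation are dropped, records that pass but carry contextual flags go to a person, and everything else is sent. This is a minimal sketch, assuming a hypothetical `triage` function and `Route` enum; the flag names are illustrative only.

```python
from enum import Enum
from typing import List


class Route(Enum):
    SEND = "send"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


def triage(passed_validation: bool, context_flags: List[str]) -> Route:
    if not passed_validation:
        return Route.REJECT          # automation already has a clear answer
    if context_flags:
        return Route.HUMAN_REVIEW    # technically valid, contextually doubtful
    return Route.SEND


print(triage(True, ["department_mismatch"]).value)
```

Only the middle branch consumes reviewer time, so the human workload tracks the number of ambiguous records rather than total send volume.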

Where This Matters Most

The larger and more complex the outbound system becomes, the more dangerous silent misalignment gets.

Automation scales checks.

Humans protect intent.

The most reliable outbound programs treat human judgment as a safety layer, not a backup plan. Automation handles volume. Humans preserve accuracy.

What This Means

Automation is excellent at confirming what exists.

It is not designed to understand what no longer fits.

Outbound fails quietly when teams confuse technical validity with strategic readiness. The difference is subtle — and expensive.

When data stays aligned with real roles, real departments, and real buying conditions, outreach remains predictable.

When automation approves data without human interpretation, systems drift until performance breaks without warning.

Clean, context-aware data keeps outbound stable.

Unchecked data slowly turns automation into a liability instead of a multiplier.

