The Difference Between Syntax Checks and Real Verification

Syntax checks confirm formatting, but real verification tests relevance and risk. Learn why valid-looking data can still undermine outbound performance.

INDUSTRY INSIGHTSLEAD QUALITY & DATA ACCURACYOUTBOUND STRATEGYB2B DATA STRATEGY

CapLeads Team

1/30/20263 min read

Most teams don’t misunderstand lead data.

They mislabel it.

Syntax checks and verification get treated as the same thing because they both return a result that looks conclusive. A green checkmark. A “valid” status. A clean export. Once that label is applied, the data moves forward as if a decision has already been made.

In reality, no decision has happened yet.

Syntax Checks Answer a Structural Question

Syntax checks exist to confirm that an address conforms to technical rules.

Does the email follow the correct format?

Does the domain exist?

Does the server respond?

These checks are narrow by design. They answer whether a message can be delivered, not whether it should be sent.

At this stage, the data hasn’t been evaluated — it’s only been formatted.

Verification Solves a Different Problem Entirely

Verification isn’t an extension of syntax checks. It’s a separate category of work.

Where syntax asks, “Is this address technically reachable?”

Verification asks, “Is this address operationally meaningful?”

That distinction matters because most outbound failures aren’t technical. They’re contextual.

Emails can be valid while being:

Routed to shared or unmanaged inboxes

Misaligned with role responsibility

Outdated but still technically active

Syntax can’t detect any of this. It was never designed to.

How the Category Error Creeps In

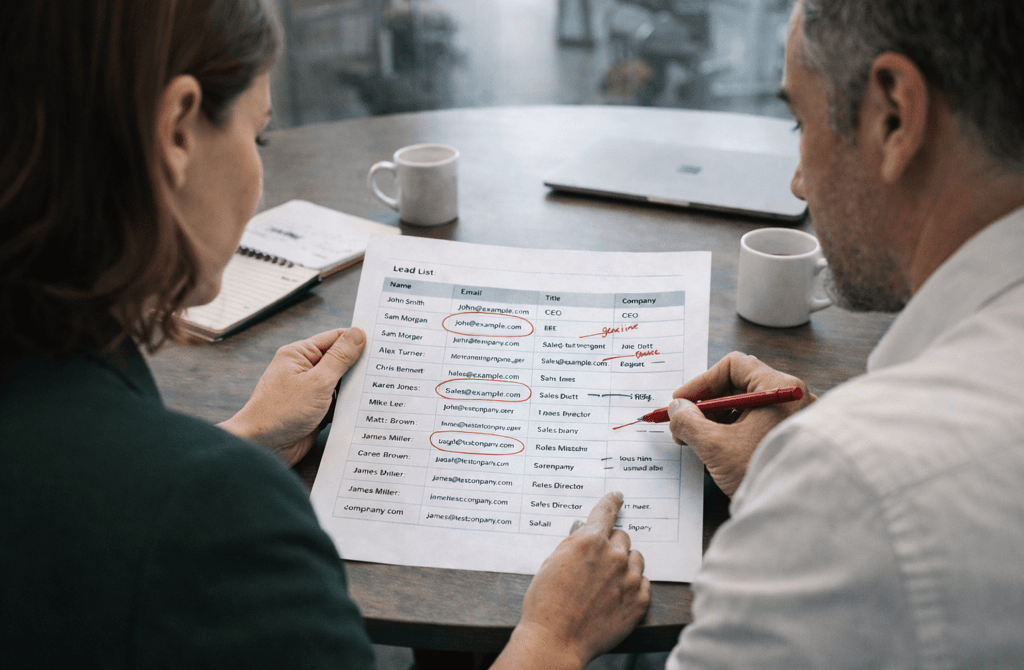

The mistake happens when syntax output is treated as a verdict.

Once a list is labeled “clean,” teams stop questioning it. The data is assumed correct, and any performance issues are attributed downstream — to copy, timing, or channel selection.

This creates a blind spot:

the data is never evaluated for intent, relevance, or risk because it already “passed.”

The category error isn’t trusting automation.

It’s trusting automation to answer a question it was never built to ask.

Why This Confusion Persists

Syntax checks produce certainty. Verification produces judgment.

Certainty is easy to scale, easy to sell, and easy to explain. Judgment is slower, contextual, and harder to summarize in a dashboard.

As a result, teams optimize around what’s measurable instead of what’s meaningful. They assume that if data clears a technical bar, it must be usable.

That assumption holds at small volumes.

It breaks the moment repetition enters the system.

What Real Verification Actually Evaluates

Verification doesn’t look at fields in isolation. It looks at fit.

Fit between:

Role and function

Contact and company behavior

Data freshness and usage context

These aren’t binary checks. They’re interpretive decisions.

That’s why verification feels less definitive — but produces fewer surprises later.

The Practical Consequence of Mixing the Two

When syntax checks are mistaken for verification, teams end up compensating instead of correcting.

They:

Rewrite messaging to overcome weak relevance

Increase volume to offset low intent

Add steps to sequences to “warm up” cold targets

Accept uneven performance as normal variance

All of that effort exists to work around a labeling mistake made at the start.

Where the Line Should Be Drawn

Syntax checks decide whether data is processable.

Verification decides whether data is usable.

When those two decisions are collapsed into one, outbound becomes harder to reason about. When they’re separated, performance becomes easier to diagnose — even when results aren’t perfect.

What This Changes

A list that passes syntax isn’t clean.

It’s simply formatted.

Treating it as finished pushes the real work downstream, where errors are harder to trace and more expensive to fix.

Syntax makes data acceptable to machines.

Verification makes data acceptable to strategy.

Confusing the two doesn’t break outbound immediately — it just ensures that problems surface later, when they’re harder to explain.

Related Post:

How Vertical Dynamics Shape Data Stability Over Time

Why Certain Industries Generate More Role Ambiguity

How LinkedIn Data Stays “Fresh” Longer Than Email Data

Why Phone Numbers Age Faster in Certain Industries

The Channel Fit Signals That Predict Reply Probability

How Email Bounce Risk Doesn’t Translate to LinkedIn

Why LinkedIn Titles Matter More Than Email Metadata

How Regulatory Environments Influence Data Quality

Why Global Lead Lists Require Region-Specific Handling

The International Data Signals That Predict Reliability

How Country-Level Mobility Impacts Role Accuracy

Why Global Data Drifts Faster in Emerging Markets

How Market Competition Influences Lead Pricing

Why Industry Complexity Drives Lead Cost Variation

The Cross-Industry Factors That Predict Lead Price

How Data Difficulty Impacts Lead Cost Across Verticals

Why Some Sectors Offer Better Lead Value Than Others

Why Outbound Falls Apart When Lead Lists Age Faster Than Your Campaigns

The Real Reason Fresh Data Makes Your Outreach Feel Easier

How a 90-Day Recency Window Changes Your Entire Cold Email Strategy

The Hidden Costs of Emailing Contacts Who Haven’t Been Validated Recently

Why Aged Leads Attract More Spam Filter Scrutiny

The Silent Errors That Occur When Providers Skip Manual Review

How Deep Validation Reveals Problems Basic Checkers Can’t Detect

The Multi-Step Verification Process Behind Reliable Lead Lists

Why Cheap Tools Miss the Most Dangerous Email Types

Connect

Get verified leads that drive real results for your business today.

www.capleads.org

© 2025. All rights reserved.

Serving clients worldwide.

CapLeads provides verified B2B datasets with accurate contacts and direct phone numbers. Our data helps startups and sales teams reach C-level executives in FinTech, SaaS, Consulting, and other industries.