How Automated Tools Miss High-Risk Email Patterns

Email validation tools approve addresses one at a time. This article explains how that row-by-row approach misses high-risk email patterns that quietly undermine outbound performance.


CapLeads Team

2/3/2026 | 3 min read

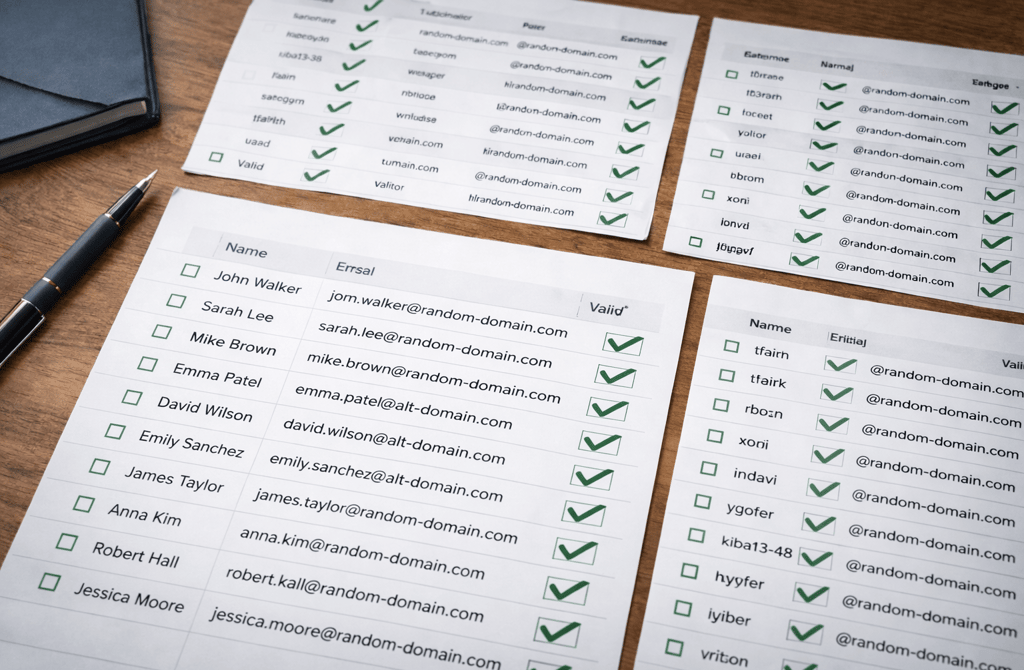

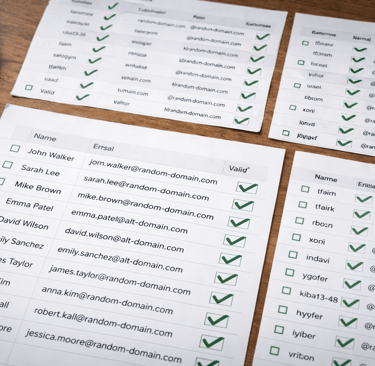

Most validation systems answer the wrong question.

They ask whether each email is acceptable on its own, not whether the list is safe as a whole. The difference is subtle, and it is exactly where high-risk email patterns slip through unnoticed.

Nothing looks broken. Everything passes. And yet performance quietly degrades.

Validation Tools Think in Rows, Not Shapes

Automated tools are built to evaluate records independently. One email, one verdict. Pass or fail.

That design works for catching obvious problems:

Invalid syntax

Dead domains
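A minimal sketch of what row-level validation looks like, assuming a deliberately simple syntax pattern and a hypothetical `DEAD_DOMAINS` set standing in for a real DNS or MX lookup. The function name and sample addresses are illustrative, not any vendor's actual logic.

```python
import re

# Deliberately simple pattern; real address syntax (RFC 5322) is far broader.
EMAIL_RE = re.compile(r"^[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}$")

# Hypothetical stand-in for a live DNS/MX check.
DEAD_DOMAINS = {"defunct-example.com"}


def validate_record(email: str) -> str:
    """Return a verdict for one address, ignoring every other row."""
    if not EMAIL_RE.match(email):
        return "invalid_syntax"
    domain = email.rsplit("@", 1)[1].lower()
    if domain in DEAD_DOMAINS:
        return "dead_domain"
    return "pass"


rows = ["ana@example.com", "not-an-email", "bob@defunct-example.com"]
verdicts = [validate_record(r) for r in rows]
```

Note that `validate_record` never sees more than one address at a time. Nothing in it can notice what the rows have in common.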

But risky email behavior rarely lives at the single-record level. It emerges across the list.

Patterns form when:

Multiple emails share similar structures

Domains repeat in unnatural clusters

Usernames follow predictable sequences

Roles align too cleanly across unrelated companies

Each record passes. The pattern fails.
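Two of the list-level signals above, domain clustering and sequential usernames, can be sketched in a few lines. This is an illustration under assumptions: the function names, the `min_run` default, and the sample addresses are hypothetical, and what counts as an "unnatural" share is left to the reviewer.

```python
import re
from collections import Counter, defaultdict


def domain_share(emails):
    """Fraction of the list held by each domain."""
    counts = Counter(e.rsplit("@", 1)[1].lower() for e in emails)
    total = len(emails)
    return {d: n / total for d, n in counts.items()}


def sequential_usernames(emails, min_run=3):
    """Find numbered runs such as sales1, sales2, sales3 on one domain."""
    groups = defaultdict(set)
    for e in emails:
        local, domain = e.lower().rsplit("@", 1)
        m = re.fullmatch(r"(.*?)(\d+)", local)  # prefix + trailing digits
        if m:
            groups[(m.group(1), domain)].add(int(m.group(2)))
    flagged = {}
    for key, nums in groups.items():
        ordered = sorted(nums)
        run = best = 1
        for prev, cur in zip(ordered, ordered[1:]):
            run = run + 1 if cur == prev + 1 else 1
            best = max(best, run)
        if best >= min_run:
            flagged[key] = best  # longest consecutive run found
    return flagged


emails = [
    "sales1@acme.test",
    "sales2@acme.test",
    "sales3@acme.test",
    "maria.lopez@globex.test",
]
shares = domain_share(emails)
runs = sequential_usernames(emails)
```

Every address in `emails` would pass a syntax check. Only the grouped view shows that three quarters of the list sits on one domain in a numbered sequence.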

Automation doesn’t see patterns unless it’s explicitly built to look for them, and most validation systems aren’t.

Why Pattern Risk Is Invisible to Automated Checks

Automation assumes independence. Humans instinctively look for correlation.

High-risk email patterns often include:

Reused naming conventions across different domains

Identical role-based formats appearing too frequently

None of these violate technical rules. They violate statistical expectations.
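One way to turn "statistical expectations" into something measurable is to reduce each username to its structure and count how widely that structure spreads. The sketch below assumes a small illustrative `ROLE_LOCALS` set and a hypothetical `shape` helper. Common conventions like first.last will naturally appear on many domains, so the numbers are input for review, not a verdict.

```python
import re
from collections import defaultdict

# Illustrative only; real role-address lists are longer and vary by source.
ROLE_LOCALS = {"info", "sales", "admin", "support", "contact"}


def shape(local: str) -> str:
    """Collapse a local part to its structure, e.g. 'john.smith' -> 'a.a'."""
    s = re.sub(r"[a-z]+", "a", local.lower())
    return re.sub(r"\d+", "9", s)


def summarize(emails):
    """Return (domains per shape, share of role-based addresses)."""
    domains_by_shape = defaultdict(set)
    role_count = 0
    for e in emails:
        local, domain = e.lower().rsplit("@", 1)
        domains_by_shape[shape(local)].add(domain)
        role_count += local in ROLE_LOCALS
    shape_spread = {s: len(d) for s, d in domains_by_shape.items()}
    return shape_spread, role_count / len(emails)


sample = [
    "john.smith@a.test",
    "mary.jones@b.test",
    "li.wei@c.test",
    "info@d.test",
]
shape_spread, role_share = summarize(sample)
```

Here one shape covers three unrelated domains and a quarter of the list is role-based. Whether that is normal depends on the source, which is the judgment a row-level check never gets to make.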

Inbox providers don’t judge emails in isolation. They evaluate sending behavior in aggregate and over time. When patterns repeat unnaturally, trust erodes, even if no single email is invalid.

Automation validates correctness. Providers infer intent.

Passing Validation Is Not the Same as Being Safe

This is where teams get misled.

A list can show:

Low immediate bounce rates

Clean syntax results

“Verified” labels across the board

And still be risky.

Why? Because risky patterns don’t trigger errors — they trigger suspicion. Spam filters and inbox systems are designed to recognize repetition, similarity, and unnatural uniformity.

Automation says: “Nothing failed.”

Inbox systems ask: “Why does this look manufactured?”

Those are very different evaluations.

How Pattern Risk Damages Outbound Over Time

Pattern-level issues rarely cause instant failure. They cause slow degradation.

Common symptoms include:

Reply rates declining without obvious cause

Soft bounces increasing gradually

Domains requiring more warmup effort than expected

Engagement flattening despite stable copy and targeting

Teams often respond by adjusting messaging or cadence, unaware that the underlying list structure is training inbox providers to distrust future sends.

The data isn’t wrong. The shape of the data is.
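Slow degradation is easier to catch as a trend than as a single bad number. A minimal sketch, assuming hypothetical weekly soft-bounce rates and an arbitrary alert threshold chosen only for illustration:

```python
def slope(values):
    """Least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


# Hypothetical data: no single week looks alarming on its own.
weekly_soft_bounce = [0.010, 0.012, 0.013, 0.015, 0.018]

ALERT_SLOPE = 0.001  # assumed threshold: +0.1 percentage points per week
drifting = slope(weekly_soft_bounce) > ALERT_SLOPE
```

Each weekly figure would pass a typical bounce-rate check. The slope is what shows the direction of travel.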

Why Automation Can’t Self-Correct This

Automated systems are reactive. They improve when failure is explicit.

Pattern risk doesn’t fail loudly. It accumulates quietly.

Unless a system is explicitly designed to:

Compare records against each other

Track repetition thresholds

Flag unnatural clustering

…it will keep approving lists that pass every technical check yet degrade performance over time.
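The three capabilities listed above can be sketched with the standard library alone. This example compares every pair of usernames with `difflib.SequenceMatcher`, applies a repetition threshold, and flags the list for review. Both constants and both function names are assumptions for illustration, and the pairwise comparison grows quadratically, so it suits a sample rather than a full list.

```python
from difflib import SequenceMatcher
from itertools import combinations

SIMILARITY_CUTOFF = 0.8  # assumed: how alike two usernames must be to count
MAX_SIMILAR_SHARE = 0.2  # assumed: tolerated share of near-identical pairs


def similar_pair_share(emails):
    """Share of record pairs whose usernames are near-identical."""
    local_parts = [e.lower().rsplit("@", 1)[0] for e in emails]
    pairs = list(combinations(local_parts, 2))
    if not pairs:
        return 0.0
    hits = sum(
        SequenceMatcher(None, a, b).ratio() >= SIMILARITY_CUTOFF
        for a, b in pairs
    )
    return hits / len(pairs)


def review_needed(emails):
    """Flag the list when too many records resemble each other."""
    return similar_pair_share(emails) > MAX_SIMILAR_SHARE


clustered = ["contact01@a.test", "contact02@b.test", "contact03@c.test"]
varied = ["maria.lopez@a.test", "jt@b.test", "oliver@c.test"]
```

With these inputs, `review_needed(clustered)` is true and `review_needed(varied)` is false, even though all six addresses are individually valid.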

Most validation tools weren’t built for this layer of judgment. They were built to scale checks, not interpret behavior.

Where Human Review Changes the Outcome

Humans naturally detect patterns before they become problems.

A reviewer doesn’t just see “approved.” They notice:

Too many similar formats

Unnatural consistency across sources

Lists that feel generated rather than discovered

This is less intuition than experience applied to context.

Human review doesn’t replace automation. It complements it by evaluating what automation cannot reason about: collective behavior.

The Real Risk Is Overconfidence

The most dangerous lists are the ones that look perfect.

When everything passes, teams stop questioning. That’s when pattern risk has the easiest path into live campaigns.

High-performing outbound systems assume:

Approval requires contextual judgment

Safety is determined by behavior, not labels

That mindset is what keeps performance stable as volume grows.

What This Means

Automated tools are excellent at verifying individual emails.

They are not designed to judge how lists behave collectively.

Outbound fails slowly when teams trust approval signals without questioning patterns. By the time the damage is visible, correction is expensive.

When lists are reviewed for structure, repetition, and distribution, outbound remains predictable.

When pattern risk goes unchecked, validation becomes a false sense of security.

Clean data isn’t just valid — it behaves naturally.

And behavior is something automation still struggles to understand.

