How AI Detects Drift Patterns Before Humans Notice

AI spots subtle data drift long before metrics break. Learn how pattern shifts reveal lead quality decay before humans can see it.

INDUSTRY INSIGHTS | LEAD QUALITY & DATA ACCURACY | OUTBOUND STRATEGY | B2B DATA STRATEGY

CapLeads Team

1/24/2026 · 3 min read

Drift doesn’t arrive as a break.

It arrives as a shift.

Nothing looks wrong at first. Campaigns still send. Replies still come in. Metrics hover inside acceptable ranges. The problem is that by the time humans agree something feels off, the underlying change has already settled in.

AI detects drift earlier because it doesn’t wait for failure. It watches movement.

Why humans notice drift late by default

Human review is anchored to thresholds.

Teams react when:

reply rates fall below expectations

bounce rates cross a visible line

segments stop converting the way they used to

This works for catching breakdowns. It’s not built for spotting directional change.

Humans are good at recognizing states (“working” vs “not working”).

Drift happens in transitions — where nothing is broken yet.

Drift is not decay — it’s misalignment in motion

Data decay implies age.

Drift implies change.

Examples:

job titles evolving faster than enrichment updates

domains shifting their usage patterns, such as responding differently at the same send volume

role seniority flattening across company sizes

Each change is small. Together, they alter how data behaves — even though individual fields still look acceptable.

Drift-detection models are built to track how these fields relate to one another, continuously rather than at review time.

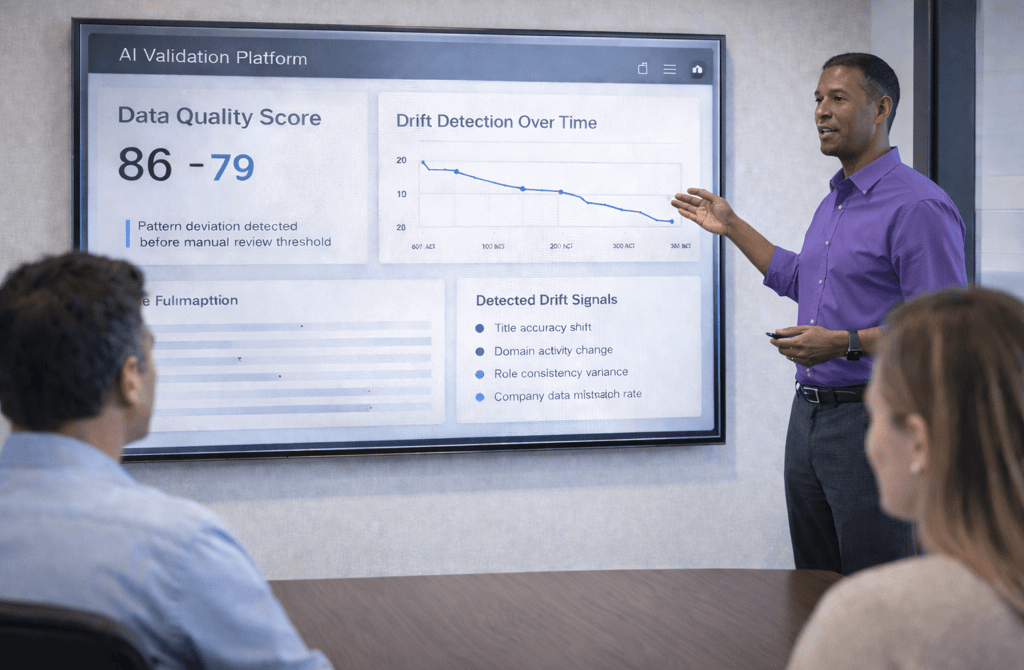

What AI sees that dashboards don’t

Traditional dashboards show snapshots:

averages

totals

deltas

AI looks at shape: how a metric's trend, spread, and timing change over time.

It detects:

slope changes instead of drops

variance expansion instead of outright errors

timing shifts instead of volume loss

A reply rate that stays flat but takes longer to arrive is a signal.

A title distribution that widens slightly over time is a signal.

A domain that responds differently at the same volume is a signal.

None of these trip alarms on their own. Together, they indicate drift.
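As a rough illustration of the three shape signals above, the sketch below compares a recent window of a metric against an older baseline window. It is a minimal sketch under stated assumptions, not a description of any particular product: the function names (`slope`, `shape_signals`), the window lengths, and the sample numbers (weekly median hours to first reply) are all made up for the example.

```python
from statistics import mean, median, pvariance


def slope(values):
    """Least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    num = sum((i - x_bar) * (y - y_bar) for i, y in enumerate(values))
    den = sum((i - x_bar) ** 2 for i in range(n))
    return num / den


def shape_signals(baseline, recent):
    """Compare the shape of a recent window with a baseline window.

    slope_change   : is the trend steeper or flatter than it used to be?
    variance_ratio : has the spread widened (ratio above 1) or narrowed?
    median_shift   : has the typical value moved (e.g. replies arriving later)?
    """
    base_var = pvariance(baseline)
    if base_var == 0:
        raise ValueError("baseline is constant; variance ratio is undefined")
    return {
        "slope_change": slope(recent) - slope(baseline),
        "variance_ratio": pvariance(recent) / base_var,
        "median_shift": median(recent) - median(baseline),
    }


# Hypothetical data: weekly median hours until the first reply arrives.
baseline_weeks = [20, 22, 21, 23, 22, 21]
recent_weeks = [22, 24, 27, 29, 33, 36]

for name, value in shape_signals(baseline_weeks, recent_weeks).items():
    print(f"{name}: {value:.2f}")
```

In this made-up series no single week looks alarming, yet the slope change, the variance ratio, and the median shift all point in the same direction. How large a change should count as drift is a judgment call that depends on the data and its normal noise.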

Pattern comparison beats absolute measurement

Humans often ask: Is this number good or bad?

AI asks: Is this number behaving the way it used to?

By comparing:

current patterns vs historical baselines

segment behavior vs peer groups

recent distributions vs long-term norms

AI flags divergence before performance degrades.

This isn’t foresight. It’s pattern memory at scale.
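One way to make "recent distributions vs long-term norms" concrete is to score how far a recent category mix has moved from a baseline mix. The sketch below uses Jensen-Shannon divergence (base 2, so the score falls between 0 and 1) on job-title buckets. The choice of this measure, the helper names (`distribution`, `js_divergence`), and the sample title counts are assumptions for illustration; other distance measures would serve the same purpose.

```python
from collections import Counter
from math import log2


def distribution(labels, categories):
    """Share of each category in labels, in the order given by categories."""
    counts = Counter(labels)
    total = len(labels)
    return [counts[c] / total for c in categories]


def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two distributions.

    0 means identical distributions, 1 means no overlap at all.
    """
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a, b):
        # Terms with a == 0 contribute nothing; b is never 0 where a > 0.
        return sum(x * log2(x / y) for x, y in zip(a, b) if x > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Hypothetical title buckets from a long-term baseline and a recent batch.
baseline_titles = ["VP"] * 30 + ["Director"] * 40 + ["Manager"] * 30
recent_titles = (
    ["VP"] * 20 + ["Director"] * 35 + ["Manager"] * 30 + ["Head of"] * 15
)

categories = sorted(set(baseline_titles) | set(recent_titles))
p = distribution(baseline_titles, categories)
q = distribution(recent_titles, categories)

print(f"title drift score: {js_divergence(p, q):.3f}")
```

A score tracked batch by batch gives a single number whose behavior can be compared with its own history, which is the comparison the section describes. The same function could be run per segment to compare one segment against its peer group.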

Why early drift rarely feels urgent

Drift doesn’t hurt immediately.

Early symptoms tend to be:

slightly lower relevance

longer sales cycles

more follow-ups required

marginal engagement decay

These are easy to rationalize:

“Market’s quieter.”

“Messaging needs a tweak.”

By the time those explanations stop working, the drift has already compounded.

AI doesn’t rationalize. It compares.

The role of confidence bands in drift detection

One of the biggest differences between human and AI detection is tolerance.

Humans accept variance until it becomes uncomfortable.

AI defines acceptable movement ranges and watches for boundary pressure.

When behavior repeatedly touches the edge of those ranges — even without crossing them — AI treats it as a warning.

That’s why drift alerts often feel early or conservative. They’re based on trajectory, not outcome.
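The idea of boundary pressure can be sketched as follows: build a band from baseline behavior, then count how often recent values land near the edge of the band without leaving it. This is a minimal sketch, similar in spirit to the run rules used with control charts. The function name `boundary_pressure`, the band width (3 standard deviations), the edge zone (beyond 2 standard deviations), the hit count, and the sample data are all assumptions chosen for the example.

```python
from statistics import mean, pstdev


def boundary_pressure(baseline, recent, limit_sigmas=3.0, edge_sigmas=2.0,
                      min_hits=3):
    """Flag repeated near-edge values even when the band is never crossed.

    The band is baseline mean +/- limit_sigmas * baseline std deviation.
    The edge zone is the part of the band beyond edge_sigmas.
    """
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        raise ValueError("baseline is constant; no band can be built")

    z_scores = [(x - mu) / sigma for x in recent]
    crossed = sum(1 for z in z_scores if abs(z) > limit_sigmas)
    near_edge = sum(1 for z in z_scores if edge_sigmas <= abs(z) <= limit_sigmas)

    return {
        "crossed": crossed,      # outright breaches of the band
        "near_edge": near_edge,  # values pressing on the edge of the band
        "warn": crossed == 0 and near_edge >= min_hits,
    }


# Hypothetical data: weekly bounces per 1,000 sends.
baseline_weeks = [8, 12, 8, 12, 8, 12, 8, 12]
recent_weeks = [10, 14.5, 11, 15, 14.2]

print(boundary_pressure(baseline_weeks, recent_weeks))
```

In this example no recent week breaches the band, so a threshold alert would stay silent, but three of five weeks sit in the edge zone and the sketch raises a warning. That matches the trajectory-over-outcome behavior described above: the warning fires on repeated pressure, not on a failure.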

Why drift matters before campaigns fail

Drift changes the meaning of data before it breaks the system.

If titles drift, personalization loses relevance.

If domains drift, deliverability assumptions weaken.

If role behavior drifts, ICP definitions blur.

None of these cause instant failure. They quietly reduce signal clarity — which makes every downstream decision noisier.

AI detects drift early because noise shows up in patterns long before it shows up in results.

Humans still matter — just not at detection time

AI is not better at deciding what to do.

It’s better at deciding when attention is required.

Humans are needed to:

interpret why drift is happening

decide whether it’s temporary or structural

adjust targeting, messaging, or sourcing accordingly

The advantage comes from letting AI surface the change while options are still open.

What this changes operationally

Teams that rely on human detection tend to respond after impact.

Teams that monitor drift respond while behavior is still flexible.

Early drift visibility allows:

targeted refresh instead of full replacement

segmentation adjustments instead of volume increases

messaging refinement instead of system overhaul

The difference isn't how quickly teams react.

It's how early they can intervene.

Data doesn’t fail all at once. It slides.

Systems that watch for movement — not just breakage — see it first.
