The AI Signal Patterns That Predict Lead Reliability

AI doesn’t guess lead quality. It reads patterns. Learn which AI signals actually predict B2B lead reliability before you ever send an email.

INDUSTRY INSIGHTS · LEAD QUALITY & DATA ACCURACY · OUTBOUND STRATEGY · B2B DATA STRATEGY

CapLeads Team

1/24/2026 · 3 min read

Most teams assume AI judges leads the same way humans do — by checking if an email “looks valid.”

It doesn’t.

Modern AI systems don’t ask “Can this email be sent?”

They ask “Does this contact behave like a real buyer?”

That difference is why some lists feel solid before the first send, while others quietly unravel mid-campaign.

Lead reliability isn’t a single flag. It’s a pattern profile.

What Lead Reliability Actually Means (Beyond Validity)

A lead can be technically valid and still unreliable.

Reliability answers a different question:

Will this contact behave predictably once outreach begins?

Unreliable leads introduce volatility:

inconsistent opens

delayed bounces

silent spam filtering

misleading engagement signals

AI models trained on outbound outcomes learn to spot these risks early — not by guessing intent, but by reading signal alignment.

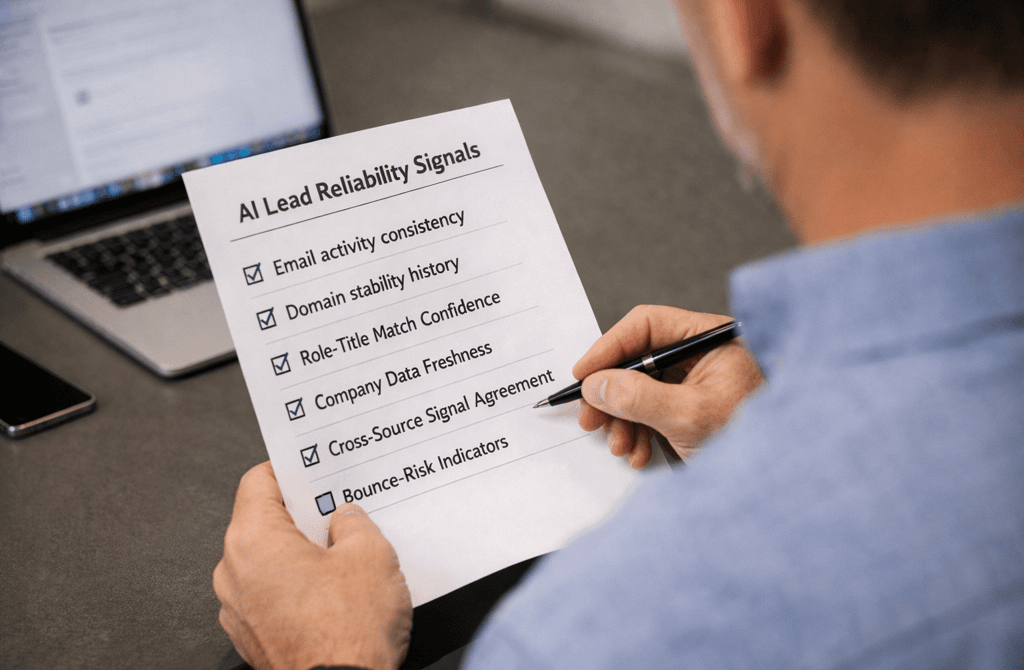

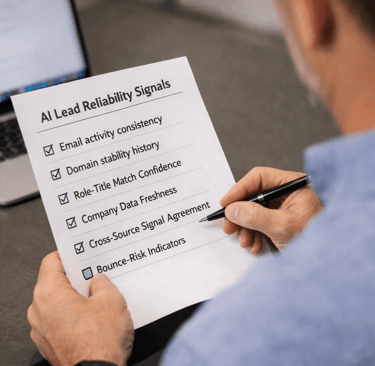

Signal Pattern #1: Activity Consistency Across Time

AI pays close attention to whether contact behavior follows stable patterns.

Reliable leads tend to show:

consistent email activity windows

stable domain response behavior

predictable engagement decay curves

Erratic signals — long inactivity followed by brief spikes — often correlate with recycled or abandoned inboxes.

Consistency matters more than raw activity.
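One simple way to measure "consistency over raw activity" is the coefficient of variation of the gaps between activity events. The sketch below assumes you have per-contact activity timestamps (opens, replies, or similar). The function name and the score mapping are hypothetical illustrations and do not describe how any specific AI model works.

```python
from datetime import datetime
from statistics import mean, pstdev


def activity_consistency(timestamps: list[datetime]) -> float:
    """Return a 0..1 score for how evenly spaced a contact's activity is.

    Uses the coefficient of variation (CV = std / mean) of the gaps between
    consecutive events. Evenly spaced activity gives CV = 0 (score 1.0);
    long silences followed by short bursts give a large CV (low score).
    """
    if len(timestamps) < 3:
        return 0.0  # too little history to judge consistency
    ordered = sorted(timestamps)
    gaps = [(b - a).total_seconds() for a, b in zip(ordered, ordered[1:])]
    avg_gap = mean(gaps)
    if avg_gap == 0:
        return 0.0  # all events at the same instant: no usable rhythm
    cv = pstdev(gaps) / avg_gap
    return 1.0 / (1.0 + cv)  # map CV in [0, inf) onto (0, 1]
```

Weekly activity at a steady rhythm scores 1.0. Three events in one morning, then nothing for six months, then another short burst, scores below 0.5, even though both contacts show activity.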

Signal Pattern #2: Domain Stability History

Domains carry memory.

AI systems evaluate:

domain age and continuity

historical deliverability behavior

sudden infrastructure changes

Leads tied to domains with frequent resets, migrations, or ownership changes show higher failure rates — even when emails technically pass validation.

Stability lowers uncertainty.
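A domain-stability check can be sketched the same way. This sketch assumes you track a registration (or first-seen) date plus dated infrastructure events such as MX migrations or ownership transfers. The `DomainHistory` type, the one-year window, and the three-year "mature" age are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DomainHistory:
    registered: date  # registration or first-seen date
    change_dates: list[date] = field(default_factory=list)  # e.g. MX migrations, ownership transfers


def domain_stability(history: DomainHistory, today: date,
                     window_days: int = 365, mature_age_days: int = 3 * 365) -> float:
    """Return a 0..1 score: older domains with few recent changes score higher."""
    # Age ramps linearly from 0 to 1 until the domain reaches `mature_age_days`.
    age_days = max((today - history.registered).days, 0)
    age_factor = min(age_days / mature_age_days, 1.0)

    # Count infrastructure changes inside the recent window only.
    recent_changes = sum(
        1 for d in history.change_dates if 0 <= (today - d).days <= window_days
    )
    # One recent change halves the score, two cut it to a third, and so on.
    return age_factor / (1 + recent_changes)
```

In this setup, a decade-old domain with no recent changes scores 1.0. The same domain after three infrastructure changes in the past year scores 0.25, even if every address on it still passes validation.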

Signal Pattern #3: Role–Title Confidence

AI models are highly sensitive to semantic mismatch.

They measure:

whether the title fits the company’s size and industry norms

how often similar titles reply in past campaigns

A “Head of Operations” at a five-person firm behaves very differently than the same title at a 500-person company.

When titles don’t fit context, reply probability drops sharply.
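The second measure above, how often similar titles reply, depends on context such as company size. Here is a minimal sketch that groups past sends by normalized title and size band, then smooths each reply rate toward a prior so that thin segments aren't over-trusted. The size bands, the 2% prior, and the prior weight of 50 are hypothetical values, not benchmarks.

```python
from collections import defaultdict
from collections.abc import Iterable


def size_band(employees: int) -> str:
    """Bucket headcount into coarse bands (hypothetical cut-offs)."""
    if employees <= 10:
        return "1-10"
    if employees <= 200:
        return "11-200"
    return "201+"


def title_reply_rates(
    history: Iterable[tuple[str, int, bool]],
    prior_rate: float = 0.02,
    prior_weight: float = 50.0,
) -> dict[tuple[str, str], float]:
    """Smoothed reply rate per (normalized title, size band).

    `history` holds (title, employee_count, replied) rows from past campaigns.
    Each segment's rate is (replies + prior_rate * prior_weight) /
    (sends + prior_weight), which pulls small segments toward the prior.
    """
    sends: defaultdict[tuple[str, str], int] = defaultdict(int)
    replies: defaultdict[tuple[str, str], int] = defaultdict(int)
    for title, employees, replied in history:
        key = (title.strip().lower(), size_band(employees))
        sends[key] += 1
        replies[key] += int(replied)
    return {
        key: (replies[key] + prior_rate * prior_weight) / (sends[key] + prior_weight)
        for key in sends
    }
```

Suppose there are 100 sends in each band, with one reply at five-person firms and ten at 500-person firms. The smoothed rates come out to about 1.3% and 7.3%, so the same title gets a different reply expectation depending on context.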

Signal Pattern #4: Company Data Freshness

AI treats freshness as a multiplier.

Outdated company records often trigger:

misaligned messaging

poor segmentation signals

false negatives in intent detection

Fresh data doesn’t just reduce bounces — it improves model confidence.

When company attributes reflect current reality, predictive accuracy rises across the entire sequence.
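Exponential decay is one way to treat freshness as a multiplier rather than as one more additive point: a record's weight halves every fixed number of days after it was last verified. The 90-day half-life below is a hypothetical placeholder. In practice, the right value would likely differ by industry and role.

```python
from datetime import date


def freshness_multiplier(last_verified: date, today: date,
                         half_life_days: float = 90.0) -> float:
    """Return a 0..1 weight that halves every `half_life_days` since verification."""
    age_days = max((today - last_verified).days, 0)  # future dates count as fresh
    return 0.5 ** (age_days / half_life_days)


def adjusted_confidence(base_score: float, last_verified: date, today: date,
                        half_life_days: float = 90.0) -> float:
    """Scale a 0..1 lead score by freshness instead of adding a freshness bonus."""
    return base_score * freshness_multiplier(last_verified, today, half_life_days)
```

Because the weight multiplies the score, a lead scoring 0.8 on a record verified 180 days ago drops to 0.2. Staleness drags every other signal down in proportion instead of subtracting a fixed amount.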

Signal Pattern #5: Cross-Source Agreement

One of the strongest predictors of reliability is signal convergence.

AI looks for agreement across:

company size indicators

role descriptions

industry classification

activity timelines

When multiple sources independently point to the same profile, reliability increases.

When signals conflict, models downgrade confidence — even if no single field looks “wrong.”
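Signal convergence can be approximated by asking, for each field, what share of sources agree with the most common value. This sketch assumes each field has already been collected from several sources as plain strings. The field names and the simple averaging are illustrative choices and don't describe any particular model.

```python
from collections import Counter


def field_agreement(values: list[str]) -> float:
    """Share of sources that agree with the most common value for one field."""
    cleaned = [v.strip().lower() for v in values if v and v.strip()]
    if not cleaned:
        return 0.0  # no usable values means no agreement to measure
    _, top_count = Counter(cleaned).most_common(1)[0]
    return top_count / len(cleaned)


def cross_source_agreement(record: dict[str, list[str]]) -> float:
    """Average per-field agreement across all fields (0..1)."""
    if not record:
        return 0.0
    scores = [field_agreement(vals) for vals in record.values()]
    return sum(scores) / len(scores)


aligned = {
    "company_size": ["51-200", "51-200", "51-200"],
    "industry": ["SaaS", "saas", "SaaS"],
    "role": ["VP Sales", "VP Sales", "vp sales"],
}
conflicting = {
    "company_size": ["51-200", "1-10", "201-500"],
    "industry": ["SaaS", "Consulting", "SaaS"],
    "role": ["VP Sales", "VP Sales", "VP Sales"],
}
```

Every individual value in `conflicting` is plausible on its own. The record still scores about 0.67, against 1.0 for `aligned`, because the sources disagree on size and industry.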

Signal Pattern #6: Bounce-Risk Indicators

Bounce risk isn’t binary.

AI evaluates:

historical soft-bounce patterns

inbox provider behavior

delivery latency anomalies

Some leads don’t bounce immediately — they fail quietly after warming phases or volume increases.

These delayed failures are among the most damaging, and AI flags them early through subtle delivery signals.
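A graded bounce-risk estimate, as opposed to a valid/invalid flag, might combine soft-bounce history with delivery-latency anomalies. This sketch assumes you log soft bounces, send attempts, and per-message delivery latency for each contact or domain. The 3x-median latency cutoff and the independence-style combination are hypothetical simplifications.

```python
from statistics import median


def bounce_risk(soft_bounces: int, attempts: int, latencies_s: list[float],
                latency_factor: float = 3.0) -> float:
    """Return a graded 0..1 bounce-risk estimate instead of a pass/fail flag.

    Blends the historical soft-bounce rate with the share of deliveries whose
    latency exceeds `latency_factor` times the median latency.
    With no history at all it returns 0.0, meaning "no evidence", not "safe".
    """
    soft_rate = soft_bounces / attempts if attempts else 0.0
    if latencies_s:
        baseline = median(latencies_s)
        slow = sum(1 for t in latencies_s if t > latency_factor * baseline)
        slow_share = slow / len(latencies_s)
    else:
        slow_share = 0.0
    # Treat the two signals as independent warning signs (a simplifying assumption):
    # risk = 1 - P(no soft-bounce problem) * P(no latency problem)
    return 1 - (1 - soft_rate) * (1 - slow_share)
```

Consider a contact that has never hard-bounced but shows 3 soft bounces in 20 attempts and 4 unusually slow deliveries. It scores about 0.32, the kind of quiet warning sign that a binary check would miss.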

Why Patterns Matter More Than Individual Fields

Single data points lie.

Patterns don’t.

AI doesn’t trust:

one clean email

one accurate title

one recent update

It trusts alignment over time.

When signals reinforce each other, reliability becomes predictable.

When they conflict, campaigns feel random — even with good copy.
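One way to make a score trust alignment is to combine signals with a geometric mean instead of a plain average, so that a single weak signal pulls the whole result down. The signal names below are hypothetical. The geometric mean is a swapped-in illustration and not a documented part of any specific AI system.

```python
import math


def combined_reliability(signals: dict[str, float]) -> float:
    """Geometric mean of 0..1 signal scores.

    A plain average lets one excellent field hide a poor one; the geometric
    mean rewards signals that are uniformly decent.
    """
    if not signals or any(v <= 0 for v in signals.values()):
        return 0.0  # missing or zero signals mean no basis for confidence
    log_total = sum(math.log(v) for v in signals.values())
    return math.exp(log_total / len(signals))


aligned = {"activity": 0.8, "domain": 0.8, "freshness": 0.8, "agreement": 0.8}
mixed = {"activity": 1.0, "domain": 1.0, "freshness": 1.0, "agreement": 0.2}
```

Both example leads average 0.8 field by field. The evenly aligned lead keeps 0.8, while the one with three perfect scores and one weak score drops to roughly 0.67.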

What This Means for Outbound Teams

If outreach feels inconsistent, the issue often isn’t messaging.

It’s that:

leads were validated, but not aligned

fields were filled, but not coherent

freshness existed in isolation, not system-wide

Reliable lead data reduces decision noise — for both humans and machines.

Bottom Line

AI doesn’t reward lists that look clean.

It rewards lists that behave consistently.

When signal patterns align — across roles, domains, freshness, and history — outbound stops feeling fragile and starts feeling repeatable.

Predictable outreach doesn’t come from smarter templates.

It comes from data that holds up once the system starts paying attention.


Connect

Get verified leads that drive real results for your business today.

www.capleads.org

© 2025. All rights reserved.

Serving clients worldwide.

CapLeads provides verified B2B datasets with accurate contacts and direct phone numbers. Our data helps startups and sales teams reach C-level executives in FinTech, SaaS, Consulting, and other industries.