How Manual Validation Fixes What Automation Misreads

Automation validates fields, but manual review corrects misread roles, context, and risk. Here’s how human validation fixes what machines miss.


CapLeads Team

12/26/2025 · 3 min read

Automation is excellent at processing rules.

Manual validation is excellent at understanding reality.

That difference matters more than most teams realize.

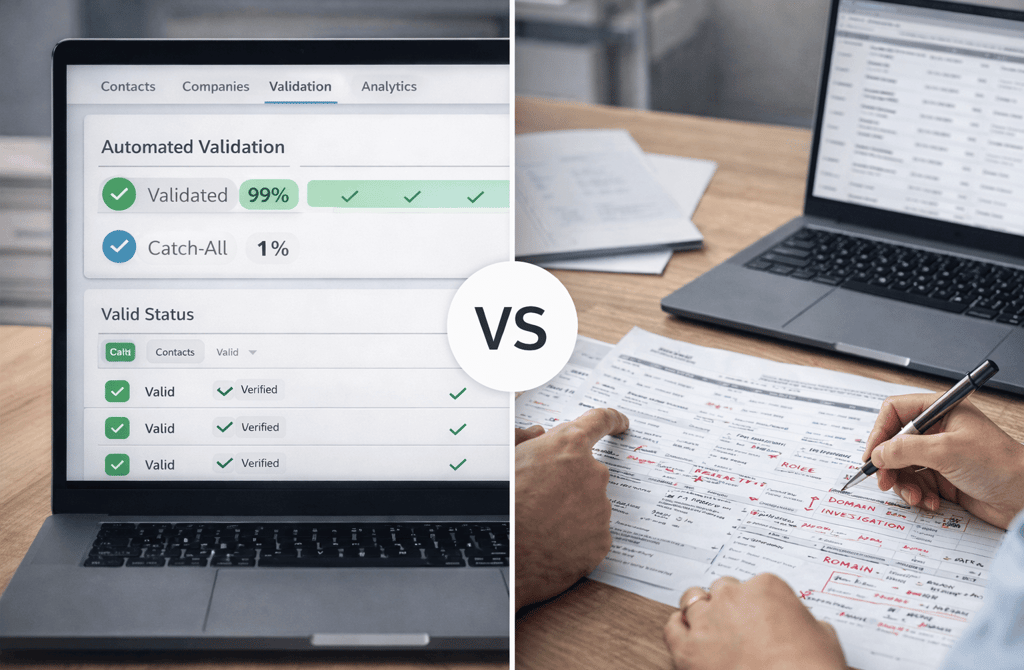

Automated validation tools don’t usually fail because they’re broken. They fail because they’re literal. They treat lead data as a set of independent fields rather than a living, contextual record shaped by human behavior, company structure, and market movement. When those signals conflict, automation follows rules. Humans resolve meaning.

That gap is where misreads happen — and why manual validation still plays a critical role in modern outbound.

Automation Reads Fields. Humans Read Situations.

An automated system can confirm that an email address exists, the domain is live, and the syntax passes. What it cannot reliably determine is whether the context behind that email still makes sense.

For example:

A contact may technically exist, but now sits in a role with zero buying authority.

A department name may still match, but the function has shifted after a reorg.

A company may be active, but the specific division tied to your offer no longer operates.

Automation sees green lights across individual fields.

Human validators see a mismatch in the overall story.

Manual validation connects the dots between title, department, company behavior, and role relevance. Rules-based systems struggle to do that consistently.
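To make the field-versus-situation gap concrete, here is a minimal Python sketch of what rules-based checking typically looks like. The `Lead` record, the regex, and the check names are hypothetical illustrations rather than any specific tool's API, and `domain_live` is assumed to come from an upstream DNS or MX lookup. Each rule inspects one field in isolation, so a record can pass every check while its overall story no longer holds.

```python
import re
from dataclasses import dataclass

# Hypothetical lead record; field names are illustrative only.
@dataclass
class Lead:
    email: str
    domain_live: bool  # assumed to be set by an upstream DNS/MX lookup
    title: str
    department: str

# Deliberately simple syntax rule, not a full RFC 5322 validator.
EMAIL_SYNTAX = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

def field_checks(lead: Lead) -> dict[str, bool]:
    """Run independent, field-level rules, the way a rules-based validator does."""
    return {
        "email_syntax": EMAIL_SYNTAX.fullmatch(lead.email) is not None,
        "domain_live": lead.domain_live,
        "title_present": bool(lead.title.strip()),
        "department_present": bool(lead.department.strip()),
    }

lead = Lead("j.doe@example.com", True, "VP Operations", "Operations")
print(all(field_checks(lead).values()))  # True: every field is green
```

Nothing in these rules can tell whether the VP of Operations still owns the purchase after a reorg, or whether the Operations division still runs the function your offer targets. That cross-field judgment is the part manual review supplies.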

Where Automation Misreads Intent

One of the most dangerous automation failures isn’t invalid emails — it’s false confidence.

Automated systems often classify leads as “safe” or “verified” based on technical deliverability checks. But deliverability is not the same as relevance or intent. A lead can be perfectly deliverable and still be a dead end.

Manual validation catches signals like:

Titles that are technically correct but functionally misleading

Contacts listed under generic leadership labels with no real buying scope

Companies where decision-making has moved away from the listed role entirely

Automation validates access.

Humans validate opportunity.

Without that layer, teams end up sending clean emails to the wrong people — and misdiagnosing low replies as a copy or timing problem.

Conflict Resolution Is a Human Skill

Modern lead data is rarely consistent across sources. Conflicting information is normal:

Different titles across platforms

Multiple departments tied to one role

Revenue or size mismatches after rapid growth or contraction

Automation often resolves these conflicts by prioritizing one source or averaging signals. Manual validation resolves them by judgment.

A human can recognize when:

One source is outdated but another reflects recent movement

A title change indicates a promotion that increases buying influence

A company restructuring changes who actually owns the decision

This kind of conflict resolution isn’t about accuracy in isolation — it’s about making the right outbound decision.
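The difference between resolving a conflict by rule and resolving it by judgment can be sketched in a few lines of Python. The source names and their ranking below are hypothetical. The first function mirrors the “prioritize one source” approach; the second does not pick a winner at all and instead flags any disagreement so a reviewer can decide which source reflects recent movement.

```python
# Hypothetical source ranking; a real pipeline would define its own order.
SOURCE_PRIORITY = ["crm", "enrichment_vendor", "web_scrape"]

def resolve_by_priority(values: dict[str, str]) -> str:
    """Rule-based resolution: silently keep the highest-ranked source's value."""
    for source in SOURCE_PRIORITY:
        if source in values:
            return values[source]
    raise ValueError("no ranked source supplied a value")

def needs_human_review(values: dict[str, str]) -> bool:
    """Judgment-based alternative: flag any disagreement instead of picking a winner."""
    normalized = {v.strip().lower() for v in values.values()}
    return len(normalized) > 1

titles = {
    "crm": "Director of IT",
    "enrichment_vendor": "VP of Information Technology",
    "web_scrape": "VP of Information Technology",
}
print(resolve_by_priority(titles))  # Director of IT
print(needs_human_review(titles))   # True
```

Here the priority rule keeps the CRM title even though two other sources agree on a different one that may reflect a promotion. Flagging the record hands that question to a person instead of letting the rule answer it silently.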

Manual Validation Prevents Silent Campaign Damage

The most costly automation misreads don’t show up as bounces. They show up as:

Flat reply rates

Low-quality responses

Inconsistent engagement patterns

Campaigns that look “technically fine” but never convert

Manual validation reduces this silent damage by filtering out leads that pass technical checks but fail strategic ones. It protects campaigns from wasting volume on contacts that will never respond meaningfully — even if they technically could.

That protection becomes more important as sending volume increases. Automation scales mistakes just as efficiently as it scales successes, and manual review acts as a checkpoint that catches those mistakes before they propagate.

The Real Role of Manual Validation Today

Manual validation isn’t about replacing automation. It’s about correcting what automation misreads.

Automation should handle:

Speed

Initial filtering

Basic technical checks

Humans should handle:

Context

Role relevance

Conflict resolution

Edge cases that affect real buying behavior

When those roles are clearly defined, outbound becomes more predictable — not because it’s faster, but because it’s smarter.
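One way to encode that division of labor is a triage step: automated rules reject what is technically broken, pass what is clean and unambiguous, and route everything else to a manual review queue. The sketch below is a hypothetical Python example; the `Record` shape, the generic-title list, and the flag names are illustrative assumptions, not a prescribed workflow.

```python
import re
from dataclasses import dataclass, field

EMAIL_SYNTAX = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
# Hypothetical examples of leadership labels too generic to imply buying scope.
GENERIC_TITLES = {"leadership team", "executive", "management", "leader"}

@dataclass
class Record:
    email: str
    title_sources: dict[str, str]  # title as reported by each data source
    flags: list[str] = field(default_factory=list)

def triage(records: list[Record]) -> tuple[list[Record], list[Record], list[Record]]:
    """Split records into (rejected, ready, manual_review)."""
    rejected, ready, manual = [], [], []
    for rec in records:
        # Automated stage: fast, rule-based technical filtering.
        if EMAIL_SYNTAX.fullmatch(rec.email) is None:
            rejected.append(rec)
            continue
        titles = {t.strip().lower() for t in rec.title_sources.values()}
        # Ambiguity is flagged for a person rather than resolved by rule.
        if not titles:
            rec.flags.append("missing_title")
        elif len(titles) > 1:
            rec.flags.append("conflicting_titles")
        if titles & GENERIC_TITLES:
            rec.flags.append("generic_title")
        (manual if rec.flags else ready).append(rec)
    return rejected, ready, manual

batch = [
    Record("ana@example.com", {"crm": "CFO", "vendor": "CFO"}),
    Record("not-an-email", {"crm": "CTO"}),
    Record("lee@example.com", {"crm": "Head of IT", "vendor": "CIO"}),
    Record("sam@example.com", {"crm": "Executive"}),
]
rejected, ready, manual = triage(batch)
print(len(rejected), len(ready), len(manual))  # 1 1 2
```

The automated stage stays fast because it only handles checks that have a clear right answer; the review queue receives just the records where context, role relevance, or conflicting sources need a human call.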

Final Thought

Automation excels at enforcing rules.

Manual validation excels at interpreting reality.

When outbound relies only on automated checks, campaigns don’t fail loudly — they fail quietly, through misalignment that no dashboard flags. Adding human judgment doesn’t slow systems down; it prevents them from scaling the wrong decisions.

Clean, well-interpreted data creates outbound that behaves consistently and converts with intent.

Misread data, even when technically valid, turns outreach into guesswork no matter how advanced the tools look.

