Why Automated Checks Miss Critical Lead Risks

Automated validation tools flag surface issues but miss deeper risk signals. Here’s why critical lead risks slip through machine-only checks.


CapLeads Team

12/26/2025 · 3 min read

Automated validation tools are designed to answer a narrow question: “Can this email be delivered?”

For many teams, that answer feels good enough.

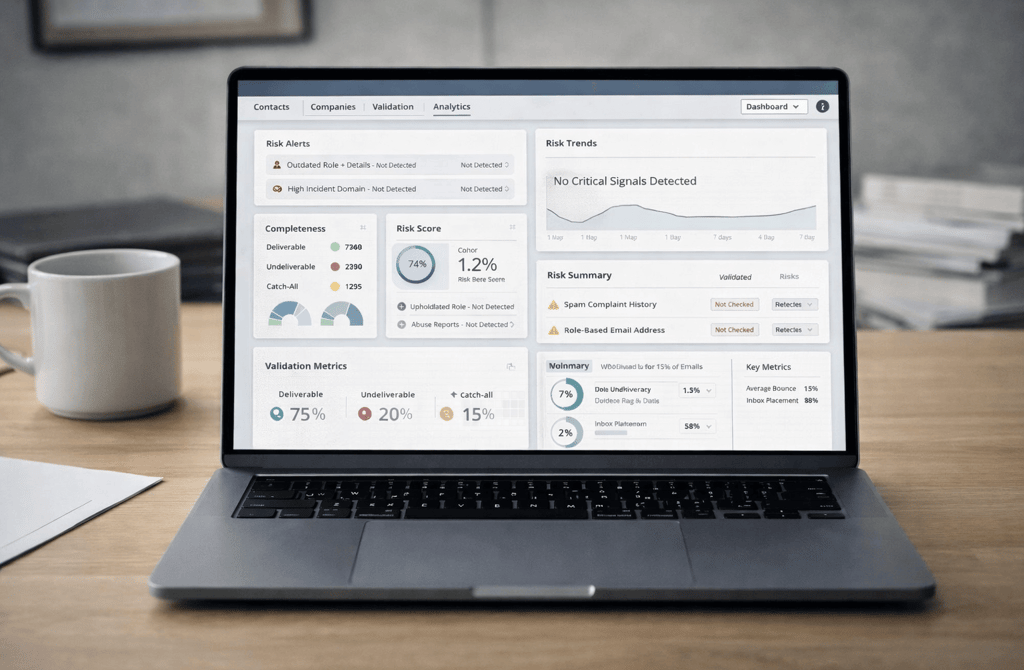

Dashboards turn green. Risk scores look acceptable. Validation percentages clear internal thresholds. Campaigns move forward.

And yet, results quietly underperform.

The problem isn’t that automation is broken. The problem is that automation optimizes for visible metrics, not hidden risk — and outbound failure almost always starts in places metrics don’t measure.

1. Automated systems validate fields, not failure modes

Most automated checks operate at the field level:

Syntax

Domain status

Catch-all detection

Mail server responses

These checks confirm that an address can receive mail, not that it’s safe, relevant, or stable to use in outbound.

Critical lead risks live outside individual fields:

Domains that accept mail but punish cold senders

Roles that technically exist but never engage

Contacts that are reachable but historically non-responsive

Automation answers “Is this valid?”

Outbound needs to answer “What happens after we send?”
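To make the gap concrete, here is a minimal sketch of a field-level check. It assumes a simplified syntax pattern, a hypothetical `Lead` record, and an illustrative set of role-based local parts; none of these come from a specific validation tool. The role-based address passes because the check never looks beyond the address itself.

```python
import re
from dataclasses import dataclass

# Simplified pattern for illustration only; real address syntax is more permissive.
EMAIL_PATTERN = re.compile(r"^[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}$")

# Hypothetical set of role-based local parts, assumed for this example.
ROLE_LOCAL_PARTS = {"info", "sales", "support", "admin", "contact"}


@dataclass
class Lead:
    email: str


def passes_field_check(lead: Lead) -> bool:
    """Field-level check: is the address well formed?"""
    return EMAIL_PATTERN.match(lead.email) is not None


def is_role_based(lead: Lead) -> bool:
    """Context check that the field-level validator above never performs."""
    local_part = lead.email.split("@", 1)[0].lower()
    return local_part in ROLE_LOCAL_PARTS


leads = [Lead("jane.doe@example.com"), Lead("info@example.com"), Lead("not-an-email")]
valid = [lead for lead in leads if passes_field_check(lead)]
role_based = [lead for lead in valid if is_role_based(lead)]
```

Two of the three sample leads count as valid, and one of those two is a shared role inbox. The field check answers the first question and is silent on the second.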

2. Risk scoring creates false certainty

Dashboards often collapse complex uncertainty into a single score: 72%, 85%, “low risk.”

This creates a dangerous illusion.

A lead list can score well while still containing:

Role-based inboxes with complaint history

Departments that never reply to cold outreach

Segments that degrade domain reputation slowly

Automated scores are averages.

Deliverability failure is driven by outliers.

Automation hides edge cases — and edge cases are what trigger inbox penalties.
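A small sketch shows how an average can hide the outliers. The scores and the threshold below are invented for illustration and do not reflect any particular tool’s scoring scale.

```python
from statistics import mean

# Hypothetical per-lead scores (higher = safer); values are made up.
scores = [95, 92, 90, 94, 91, 93, 96, 20, 15, 94]

# Assumed cutoff below which a lead counts as high risk.
RISK_THRESHOLD = 50

average_score = mean(scores)
outliers = [score for score in scores if score < RISK_THRESHOLD]
outlier_share = len(outliers) / len(scores)

print(f"average score: {average_score:.1f}")
print(f"high-risk leads: {len(outliers)} of {len(scores)} ({outlier_share:.0%})")
```

The list averages 78, which might clear an internal threshold, while two of the ten leads sit far below the cutoff. Reporting the count of leads under the threshold alongside the average keeps those outliers visible.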

3. Automation can’t detect behavioral risk

Inbox providers don’t evaluate emails in isolation. They observe behavior over time:

Who replies

Who ignores

Who deletes without reading

Who flags messages as unwanted

Automated validation doesn’t model these outcomes. It doesn’t know:

Which roles historically never engage

Which industries generate negative signals

Which segments quietly suppress inbox placement

So while the data passes validation, it fails behavioral scrutiny downstream.
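One way to bring these outcomes into view is to summarize past sends by role. The sketch below assumes a hypothetical send history with made-up roles and outcome labels; how that history is collected is outside its scope.

```python
from collections import defaultdict

# Hypothetical send history as (role, outcome) pairs; labels are assumed.
history = [
    ("founder", "replied"),
    ("founder", "ignored"),
    ("procurement", "ignored"),
    ("procurement", "ignored"),
    ("procurement", "flagged"),
    ("founder", "replied"),
]


def outcome_rates(records):
    """Return {role: {outcome: share of sends}} from past send outcomes."""
    counts = defaultdict(lambda: defaultdict(int))
    for role, outcome in records:
        counts[role][outcome] += 1

    rates = {}
    for role, outcomes in counts.items():
        total = sum(outcomes.values())
        rates[role] = {name: n / total for name, n in outcomes.items()}
    return rates


rates = outcome_rates(history)
for role, shares in rates.items():
    print(role, shares)
```

In this sample, every procurement address would pass validation, yet the role has no replies and a third of its sends were flagged. That signal exists only in the outcome history, not in the contact fields.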

4. Blind spots compound at scale

One risky lead rarely causes damage.

Thousands of similar risky leads do.

Per-lead checks evaluate each record in isolation, so automation struggles with pattern recognition across sends:

It doesn’t see cluster-level risk

It doesn’t adjust when engagement decays

This is why campaigns often look fine in week one, then deteriorate without a clear cause. The risk was always there — it just wasn’t visible in the checks.
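Engagement decay is straightforward to watch for once results are tracked per segment over time. The sketch below assumes hypothetical segment names and weekly reply rates, and uses a deliberately simple rule (a strictly falling rate over the last few weeks) rather than any established method.

```python
def is_decaying(weekly_reply_rates, min_weeks=3):
    """Return True if the last `min_weeks` values are strictly decreasing."""
    recent = weekly_reply_rates[-min_weeks:]
    if len(recent) < min_weeks:
        return False
    return all(later < earlier for earlier, later in zip(recent, recent[1:]))


# Hypothetical weekly reply rates per segment; values are made up.
segments = {
    "saas_founders": [0.050, 0.048, 0.051, 0.049],
    "generic_inboxes": [0.030, 0.022, 0.014, 0.006],
}

decaying = [name for name, rates in segments.items() if is_decaying(rates)]
print(decaying)
```

Only the `generic_inboxes` segment is reported here, even though every address in it could still pass validation. A per-lead check has no view of this trend because it exists only at the segment level.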

5. “No critical issues detected” is not the same as “safe”

Many dashboards explicitly state something like: “No critical issues detected.”

What that really means is:

No risks we know how to measure were detected.

Automation only flags what it’s trained to see. It cannot:

Question whether the wrong people are being targeted

Detect overrepresented risky segments

Understand when validation rules are too permissive

Absence of alerts is not proof of safety.

It’s proof of measurement limits.

6. Automation optimizes efficiency, not resilience

Automated checks are built to move fast. Outbound systems, however, must survive:

Volume increases

Market shifts

Role churn

Efficiency without resilience creates brittle systems. They work — until they don’t. And when they break, teams often blame copy, cadence, or tooling instead of the data assumptions baked in at the start.

Final thought

Automated validation tools do exactly what they’re designed to do. The failure happens when teams expect them to do more than they can.

They confirm deliverability, not durability.

They surface errors, not systemic risk.

They validate contacts, not outcomes.

Outbound becomes predictable only when teams recognize that passing automated checks is the starting line, not the finish.

Clean-looking dashboards don’t guarantee safe outreach.

Only data that reflects real-world risk produces results you can rely on.
