Why “Validated” Isn’t Always Valid: The Pitfalls in Modern Data Checks

Many lead lists are labeled “validated” but still fail in real outreach. This article breaks down the hidden pitfalls in modern data checks and why validation labels often mislead teams.

INDUSTRY INSIGHTS | LEAD QUALITY & DATA ACCURACY | OUTBOUND STRATEGY | B2B DATA STRATEGY

CapLeads Team

12/21/2025 · 3 min read

In modern outbound, “validated” has become a marketing term, not a technical guarantee.

Most teams assume validation means an email is safe to send. In reality, it often just means the address passed a narrow set of automated checks designed for speed, not for campaign reliability.

This gap between what validation promises and what it actually confirms is where many outbound failures begin.

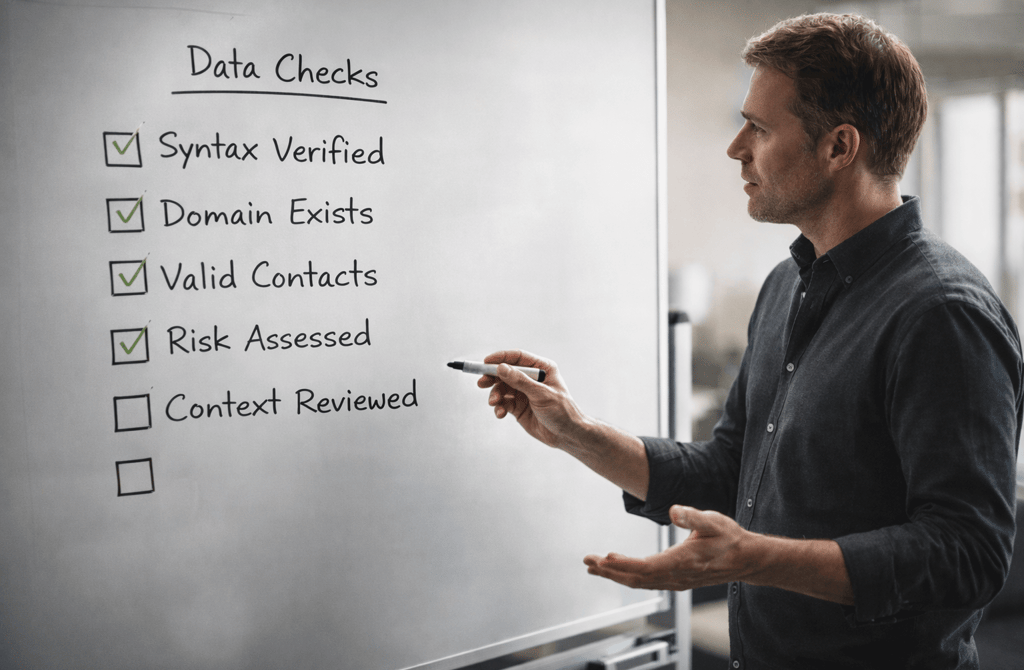

Validation Tools Optimize for Speed, Not Certainty

Most modern data checks are built to process massive volumes quickly. That shapes how they work.

They prioritize:

fast syntax evaluation

domain-level existence checks

lightweight mailbox probing

They deprioritize:

behavior under sustained sending

domain-specific filtering patterns

inbox reputation sensitivity

role stability and usage context

This isn’t negligence — it’s a design tradeoff. Validation tools are optimized to answer “does this inbox exist?” not “will this inbox tolerate your campaign?”

Those are very different questions.
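To make the tradeoff concrete, here is a minimal Python sketch of a speed-oriented check. It rests on a few assumptions. The regex is a deliberately simplified syntax rule. `has_mail_domain` is a hypothetical stand-in for a DNS MX lookup, passed in as a function so the sketch runs offline. Mailbox probing is left out entirely. Nothing in the sketch looks at volume, filtering, or recipient behavior, so its "valid" answers only the first question.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Simplified syntax rule (an assumption, not a standard): one "@",
# no whitespace, and at least one dot in the domain part.
SYNTAX = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


@dataclass
class CheckResult:
    syntax_ok: bool
    domain_ok: bool

    @property
    def label(self) -> str:
        # "valid" here means only that the narrow checks passed.
        return "valid" if self.syntax_ok and self.domain_ok else "invalid"


def quick_check(email: str, has_mail_domain: Callable[[str], bool]) -> CheckResult:
    """Fast, shallow check: syntax first, then domain existence.

    has_mail_domain is a hypothetical hook standing in for a DNS MX lookup.
    """
    syntax_ok = bool(SYNTAX.match(email))
    domain = email.rsplit("@", 1)[-1].lower() if syntax_ok else ""
    # Skip the domain lookup entirely when syntax already failed.
    domain_ok = syntax_ok and has_mail_domain(domain)
    return CheckResult(syntax_ok, domain_ok)
```

For example, `quick_check("ops@example.com", lambda d: d in {"example.com"})` returns a result labeled "valid". Swapping the stub for a real resolver would make the domain check live. The label would still mean only "passed these checks."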

“Valid” Often Means “Technically Reachable”

A technically reachable inbox can still be a poor outreach target.

Many validated emails fall into categories like:

rarely monitored inboxes

internal routing addresses

shared mailboxes with aggressive filters

inboxes that accept mail but throttle unknown senders

From a system perspective, they pass checks. From an outreach perspective, they introduce noise.

Validation confirms reachability. It does not confirm receptiveness.
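Here is a rough illustration of the gap between reachable and receptive. It assumes a hypothetical set of role-style local parts (`ROLE_LOCAL_PARTS`) as a crude proxy for shared or routing inboxes. The set is illustrative rather than a standard, and a real assessment would need far more context than the address string.

```python
from dataclasses import dataclass

# Hypothetical, illustrative list of local parts that often indicate a
# shared or routing inbox rather than an individual recipient.
ROLE_LOCAL_PARTS = {"info", "sales", "support", "admin", "office", "contact", "hello", "team"}


@dataclass
class Assessment:
    reachable: bool      # outcome of the narrow validation checks
    role_address: bool   # heuristic flag: likely shared or routing inbox

    @property
    def outreach_target(self) -> bool:
        # Reachability is necessary but not sufficient.
        return self.reachable and not self.role_address


def assess(email: str, reachable: bool) -> Assessment:
    local = email.split("@", 1)[0].lower()
    return Assessment(reachable=reachable, role_address=local in ROLE_LOCAL_PARTS)
```

In this sketch, `support@example.com` can pass validation (`reachable=True`) and still not qualify as an outreach target.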

Modern Data Checks Ignore Usage Patterns

Validation rarely evaluates how an inbox is used.

An email might be:

active but ignored

active but auto-filtered

active but shielded by layered security

active but deprioritized by recipient behavior

These usage patterns affect deliverability and engagement, but they’re invisible to most validation logic.

As a result, lists look clean while outcomes remain inconsistent.

One-Size Validation Breaks in Real Campaigns

Most modern validation applies the same checks to every email.

But inbox behavior varies dramatically depending on:

company size

industry

role type

internal security posture

historical outbound exposure

A check that works for SMB SaaS leads may be unreliable for enterprise, healthcare, or finance contacts.

When validation ignores these differences, “valid” becomes a misleading label.

Validation Doesn’t Measure Fragility

The biggest blind spot in modern data checks is fragility.

Fragile emails are not invalid — they are easy to break.

They may:

bounce under higher volume

bounce after repeated follow-ups

bounce when the sender's reputation dips slightly

Validation rarely measures how close an inbox is to rejecting mail. It only confirms that rejection hasn’t happened yet.

That’s why problems appear only after campaigns scale.
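Fragility only shows up over time. One way to watch for it is to compare bounce rates across sending waves for the same segment. The sketch below assumes you already log sent and bounced counts per wave. The `Wave` type, the `looks_fragile` helper, and the 2% threshold are hypothetical choices for illustration, not an industry standard.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Wave:
    sent: int
    bounced: int

    @property
    def bounce_rate(self) -> float:
        return self.bounced / self.sent if self.sent else 0.0


def looks_fragile(waves: Sequence[Wave], threshold: float = 0.02) -> bool:
    """Flag a segment whose first wave was clean but a later wave bounced.

    threshold is an illustrative cutoff chosen for this sketch.
    """
    if len(waves) < 2:
        return False  # not enough history to observe degradation
    first, rest = waves[0], waves[1:]
    return first.bounce_rate <= threshold and any(w.bounce_rate > threshold for w in rest)
```

A segment that bounces 0 of 100 in its first wave and 25 of 500 in its second would be flagged. A one-time validation pass run before either wave would not have seen the difference.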

Why These Pitfalls Get Blamed on Something Else

When outreach underperforms, teams rarely suspect validation.

They blame:

copy

timing

subject lines

infrastructure

sending volume

Validation gets a free pass because the list was labeled “validated.” But the label never reflected real-world conditions.

The issue wasn’t that validation failed. It’s that validation wasn’t designed to answer the right question.

What “Validated” Should Actually Mean

A useful validation signal should account for:

technical reachability

inbox tolerance under volume

domain filtering strictness

role stability

usage context

Most tools don’t do this — not because it’s impossible, but because it’s harder to productize.
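As a sketch of what a multi-dimensional signal could look like, the dataclass below holds one score per dimension from the list above. The 0.0 to 1.0 scale, the field names, and the 0.6 floor are assumptions for illustration. Measuring each dimension is the genuinely hard part and is not shown.

```python
from dataclasses import dataclass, fields
from typing import Tuple


@dataclass
class ValidationSignal:
    # Hypothetical 0.0-1.0 scores; higher is always better.
    technical_reachability: float
    volume_tolerance: float
    filtering_leniency: float  # inverse of domain filtering strictness
    role_stability: float
    usage_context: float

    def weakest(self) -> Tuple[str, float]:
        # Return the lowest-scoring dimension and its score.
        return min(((f.name, getattr(self, f.name)) for f in fields(self)), key=lambda p: p[1])

    def campaign_ready(self, floor: float = 0.6) -> bool:
        # Gate on the weakest dimension, not the average.
        return self.weakest()[1] >= floor
```

Gating on the weakest dimension rather than averaging is a deliberate choice. An average would let strong reachability hide poor volume tolerance, which is the same masking effect a single "validated" label produces.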

Final Thought

“Validated” is not a finish line. It’s a narrow checkpoint.

When teams rely on modern data checks as guarantees, they confuse technical possibility with campaign readiness. Real validation isn’t about whether an email exists — it’s about whether it will behave predictably once outreach begins.

When validation reflects inbox reality, outbound stays stable.

When validation optimizes only for speed and scale, risk hides behind clean labels.


Connect

Get verified leads that drive real results for your business today.

www.capleads.org

© 2025. All rights reserved.

Serving clients worldwide.

CapLeads provides verified B2B datasets with accurate contacts and direct phone numbers. Our data helps startups and sales teams reach C-level executives in FinTech, SaaS, Consulting, and other industries.